What's a Routing AI agent?

A Routing AI agent is an LLM-powered bot that’s focused on routing the consumer to the appropriate bot or agent who can help them with their query.

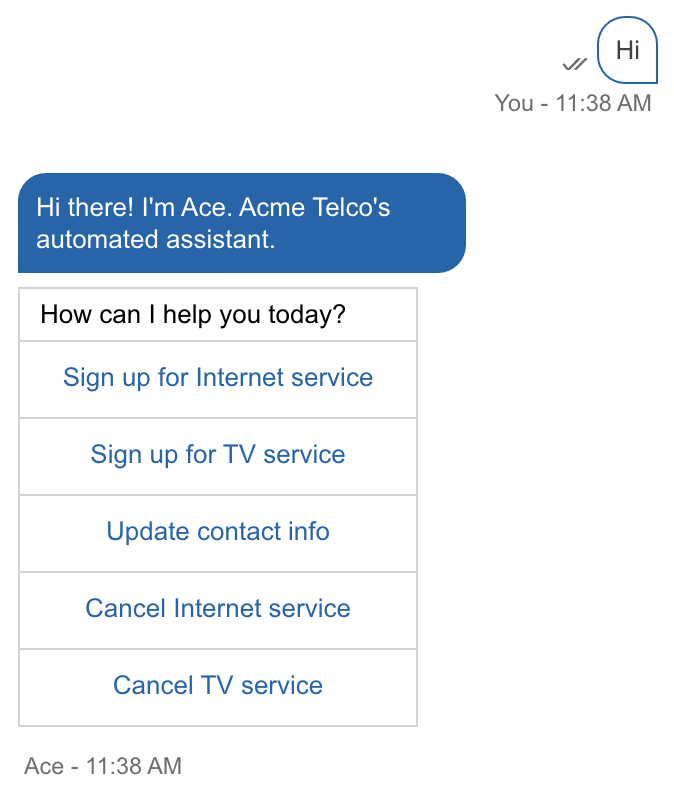

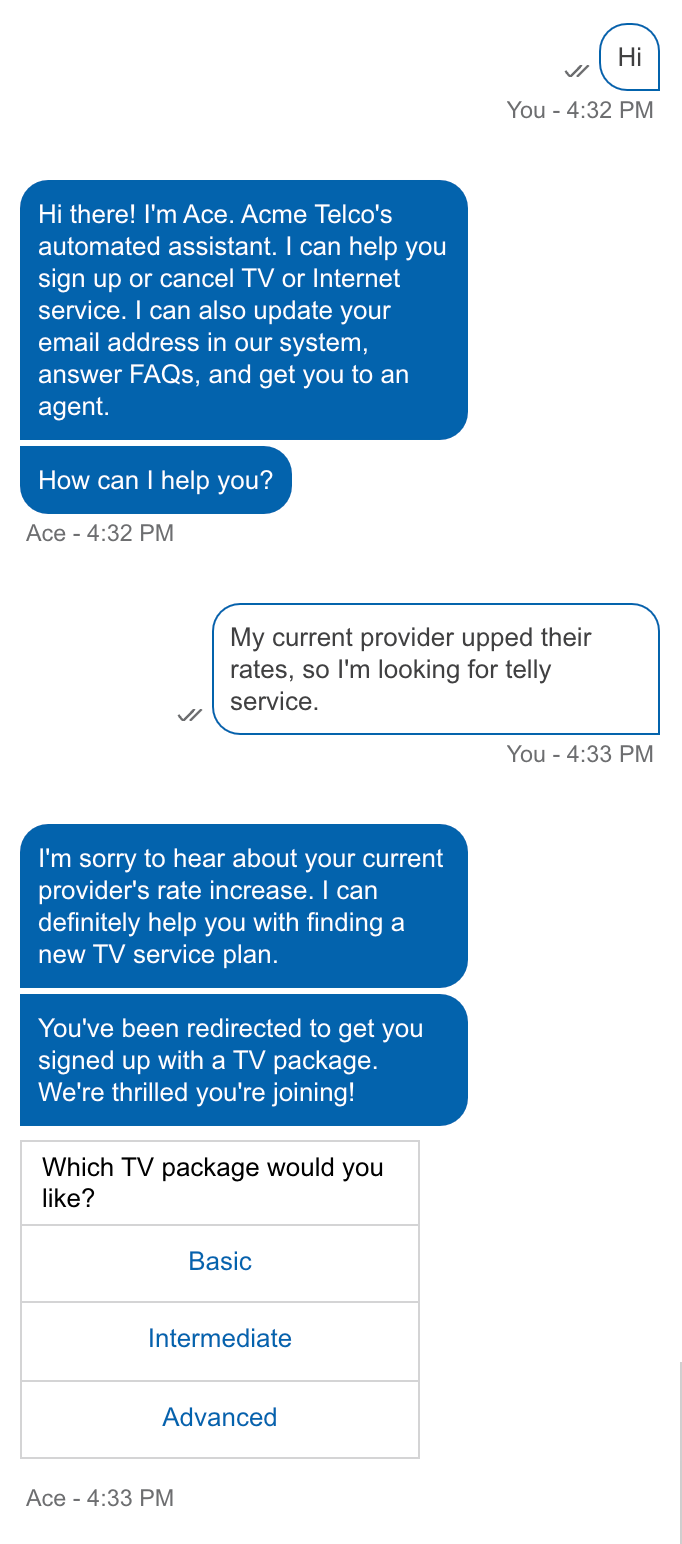

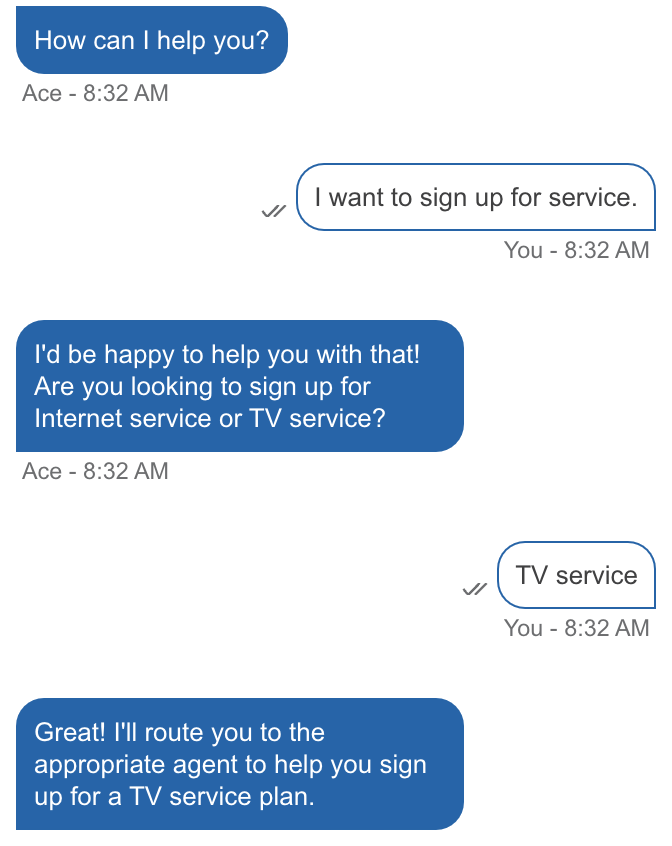

Historically, rule-based bots that use Natural Language Understanding (NLU) have been the industry standard for handling routing. They require that you invest heavily in setting up and refining an NLU intent model. Then, in the bot, you must specify the intents that trigger the various bot flows. For the consumer, the routing experience is often menu-driven and looks like this:

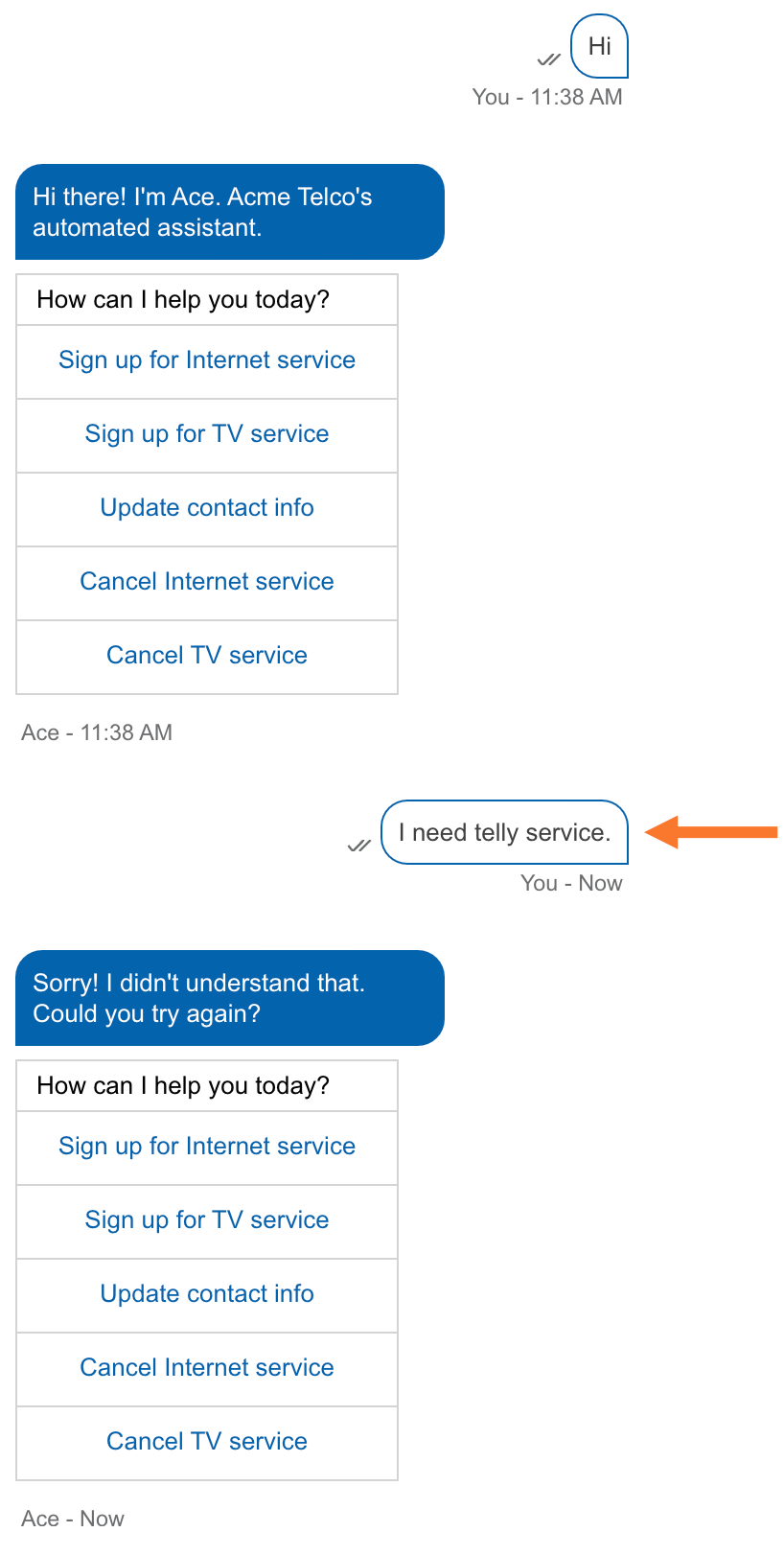

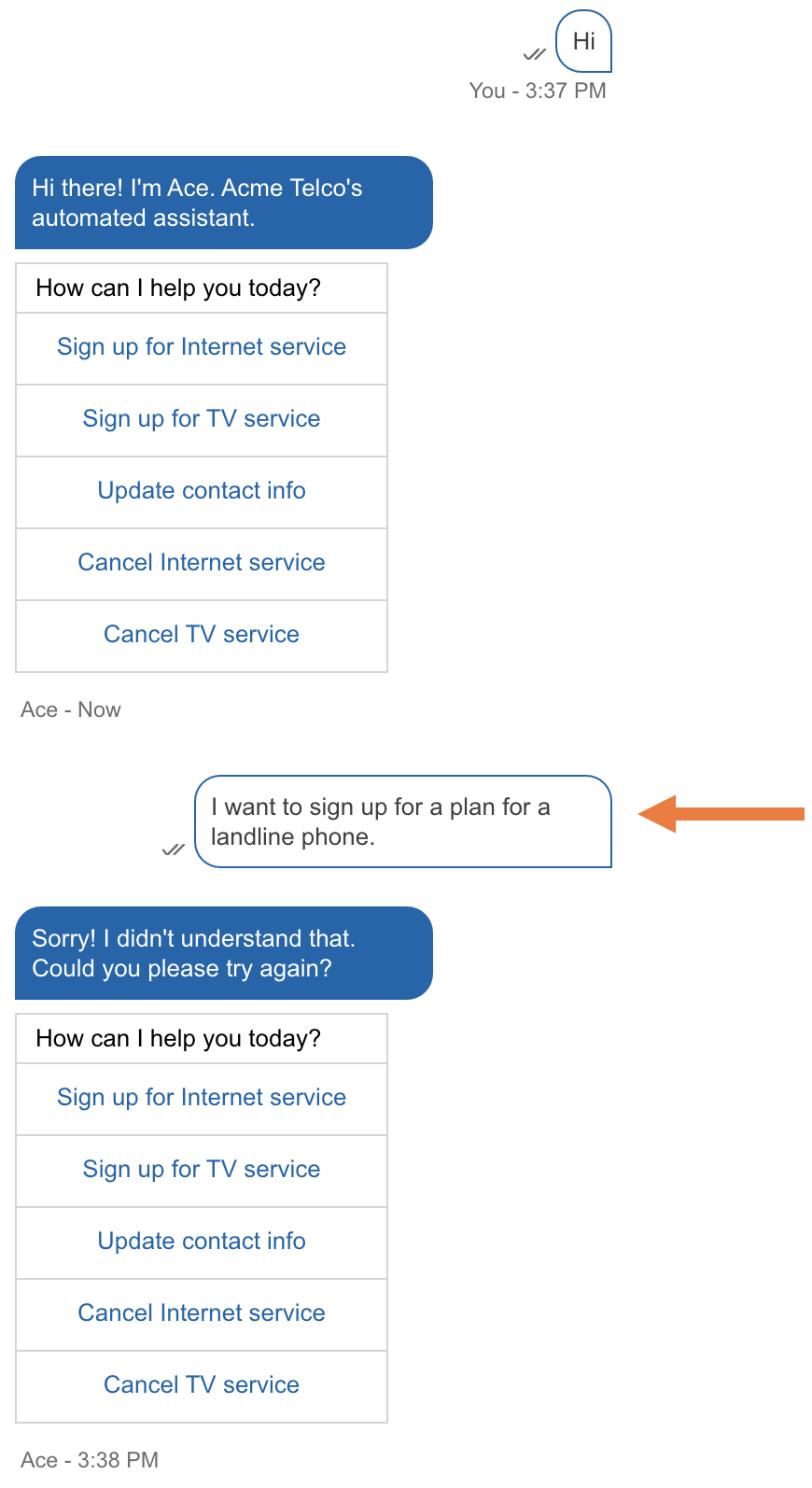

The traditional approach can work, but it’s highly deterministic, often brittle, and, therefore, problematic. It also fails to account for the complexity and variability of natural human conversation. Consumers can express things in ways not accounted for by the intent model or the bot, for example:

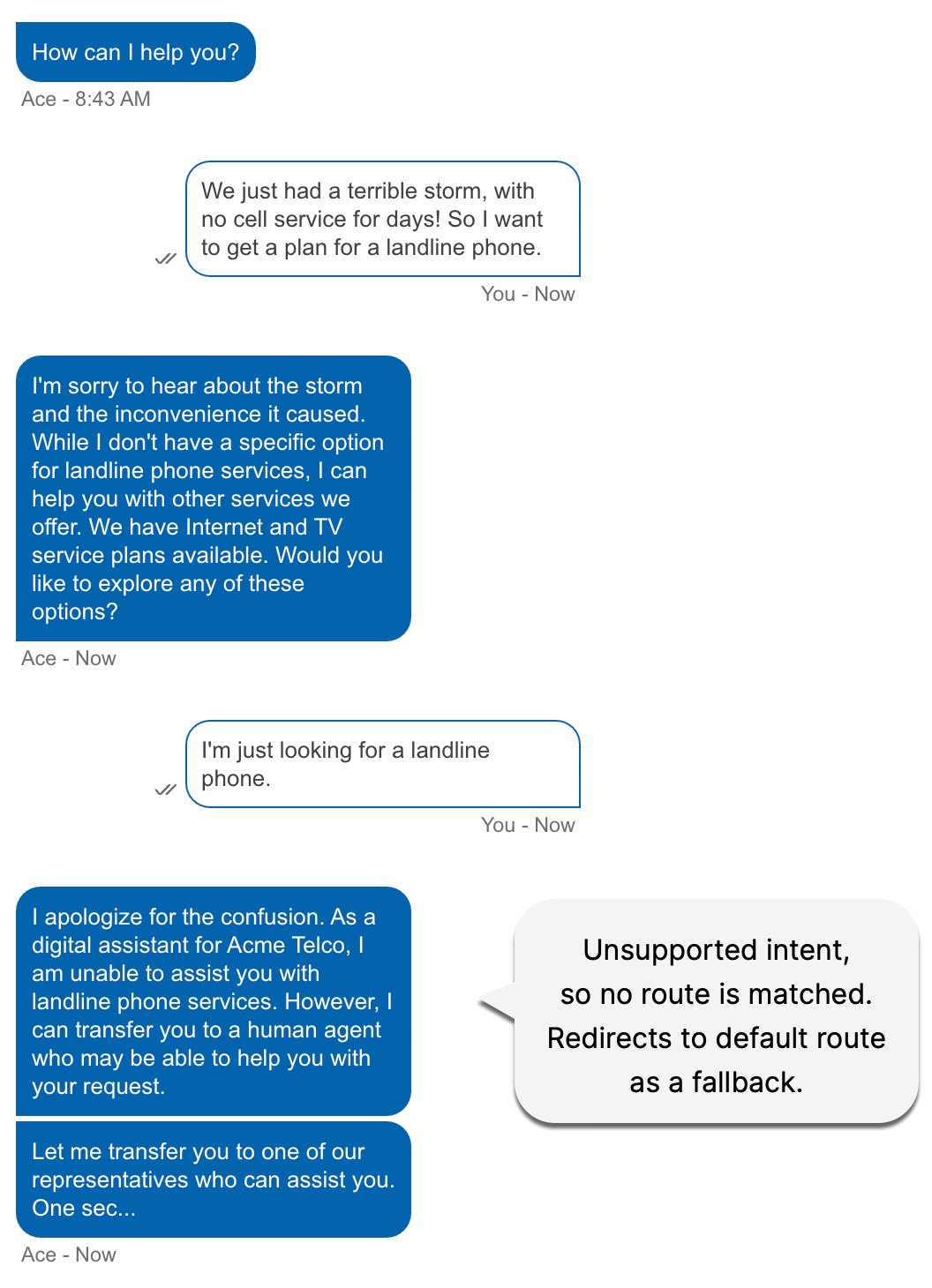

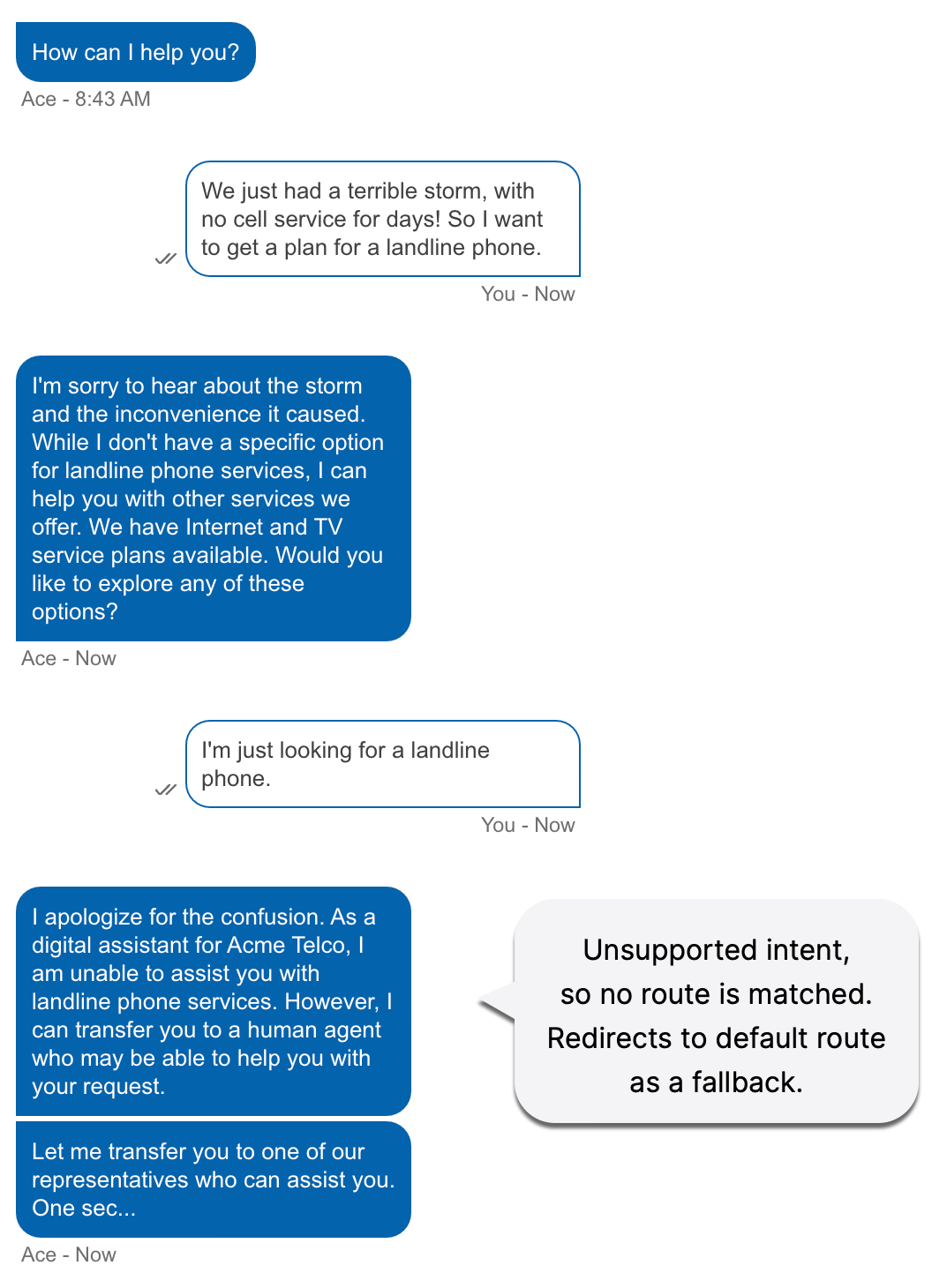

Sometimes, consumers don’t even know what they want. And when they do, some of their intentful queries fall outside of what the bot can handle.

Overall, the traditional NLU-based, rule-based approach is rigid and unsatisfactory, especially with consumer expectations now set much higher for AI engagement due to the arrival of Generative AI.

A Routing AI agent represents a paradigm shift in routing for both the bot builder and the consumer:

- For the bot builder, gone are the days of building out a vast intent model. Instead, the bot leverages an LLM to discern the consumer’s intent and to route the consumer to the right flow for resolution. What’s more, unlike a traditional bot, the Routing AI agent doesn’t respond with predefined responses. It dynamically generates its responses as it adapts to the complex conversation thread. Thus, it can handle a wide range of queries with unprecedented flexibility. As the bot builder, your tasks shift to route definition and prompt engineering.

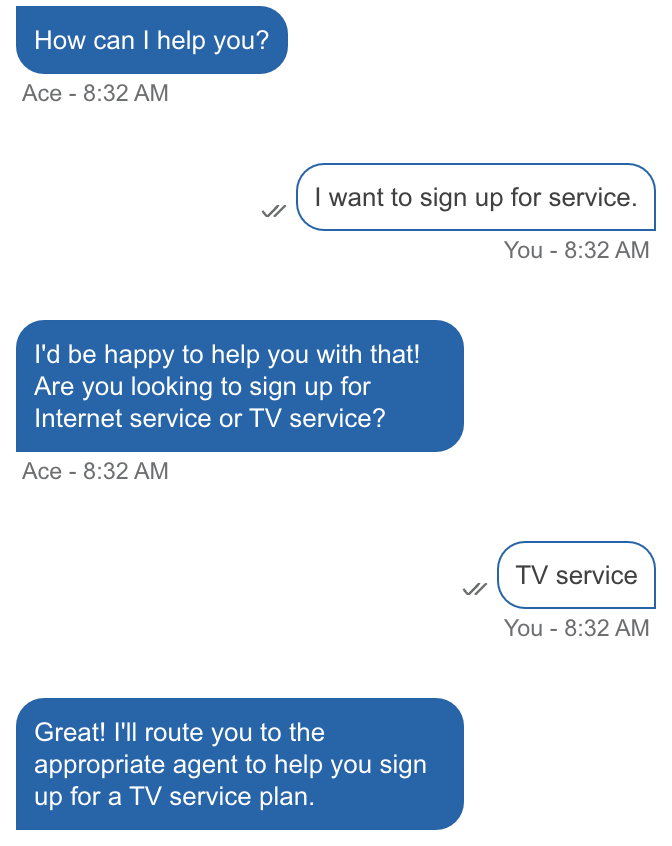

- For the consumer, the experience is much more conversational. The bot engages the consumer with language that’s natural and human-like. No more routing menus. No more canned, unsatisfactory responses like, “Sorry, I didn’t quite understand that. Could you try again?”

Let’s revisit the messaging conversations illustrated above, add a bit more complexity to them, and see what’s possible with a Routing AI agent.

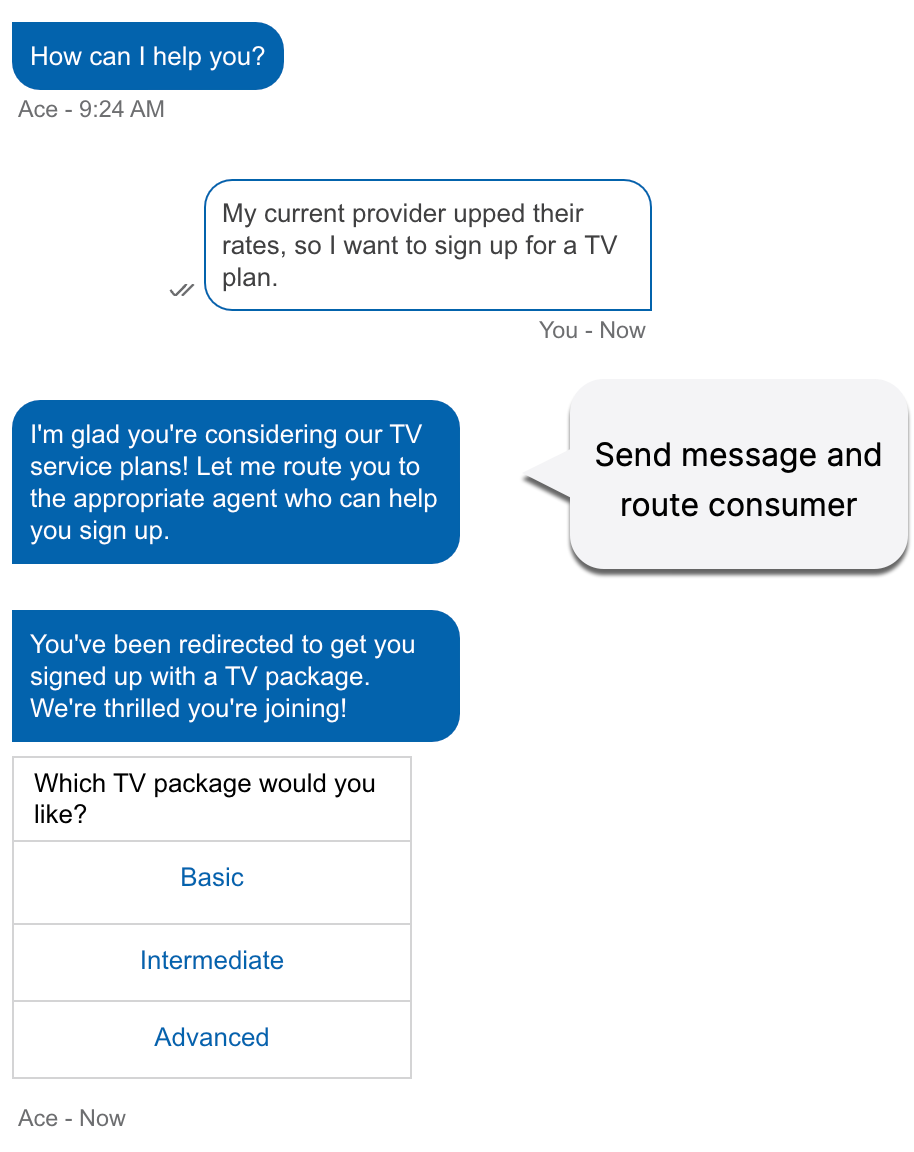

With its LLM-powered language capabilities, here’s how the bot handles the request for telly service:

Consumer intent understood. Warm response. No NLU intent model to set up and train.

And here’s how the bot gracefully deals with an intent that it isn’t designed to handle, in this case, a request for a landline phone:

Key capabilities

Advanced disambiguation capabilities

The Routing AI agent can ask clarifying questions to determine the most relevant routing destination, enhancing accuracy in fulfilling specific intents.

Customizable routing descriptions

In a Routing AI agent, you focus on tailoring the name and description of each routing option that you want to make available. The Routing AI agent takes care of the rest: discerning the consumer’s intent and routing them accordingly. There’s no need to anticipate and accommodate every conceivable scenario, as you must when building an intent-based, rule-based system. In short, the development is faster and cheaper, and the routing is more accurate.

Conversational context for warm start

The Routing AI agent retains and uses previous turns from the current conversation to interpret the most recent consumer message. Thus, it can engage the consumer with relevant and personalized dialogue.

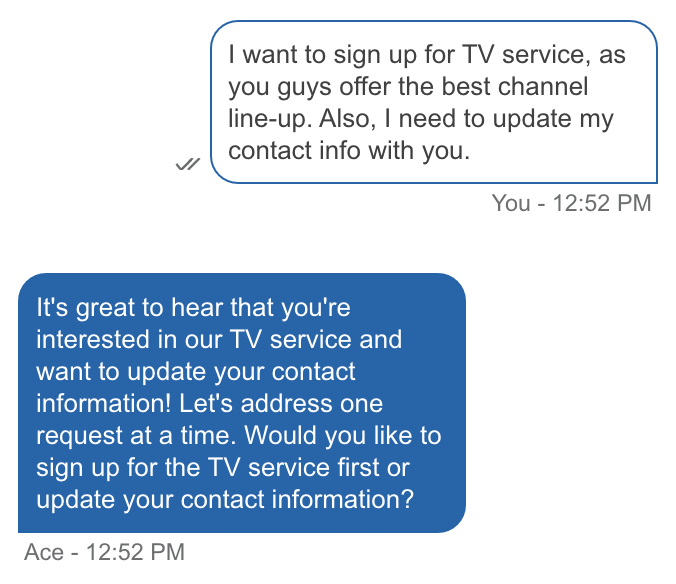

Multi-intent handling

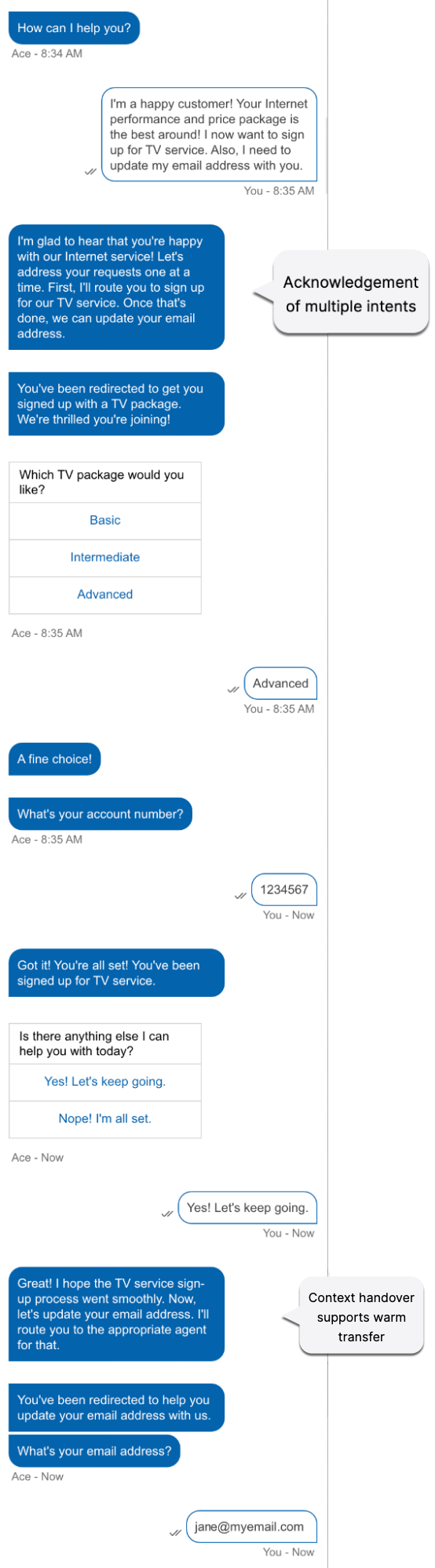

Routing AI agents can handle multiple intents, moving across dialogs or bots while maintaining continuity with respect to the routing experience:

When routing the consumer, the Routing AI agent maintains continuity by transferring relevant context. This ensures the hand-off is “warm.” Warm transfers offer several advantages:

- Smooth transitions

- Personalized assistance

- Improved customer experience

- Efficiency

- Increased trust

Key benefits

- Accelerate deployment to just a few weeks: Bypass the lengthy process of building and training a vast intent model, which typically takes months.

- Increase operational efficiency and service delivery, and reduce costs: Routing AI agents effectively decipher and address multiple, even ambiguous, consumer intents, and they transfer important context. These capabilities reduce wasted time, shorten handling times, and ease consumer frustration.

- Enjoy higher first contact resolution (FCR) and customer satisfaction (CSAT) scores.

Language support

Routing AI agents that use American English or British English are supported.

If your bot uses another language, support is experimental. Don’t hesitate to get started with exploring the capabilities of Generative AI, and stay tuned for support of additional languages. As always, proceed with care: Test thoroughly before rolling out to Production.

Unsupported features

- Export and import: Currently, export and import of bots, dialogs, and bot/dialog templates isn’t supported.

- Bot releases: While you can create versions of your Routing AI agent at crucial moments during bot development, you can’t use the Release feature to create a snapshot for the purpose of pushing that snapshot to a different bot (e.g., to your Production bot). Bot releases aren’t supported yet.

Stay tuned for upcoming changes on these fronts!

Prerequisites to building the bot

Activate our Generative AI features

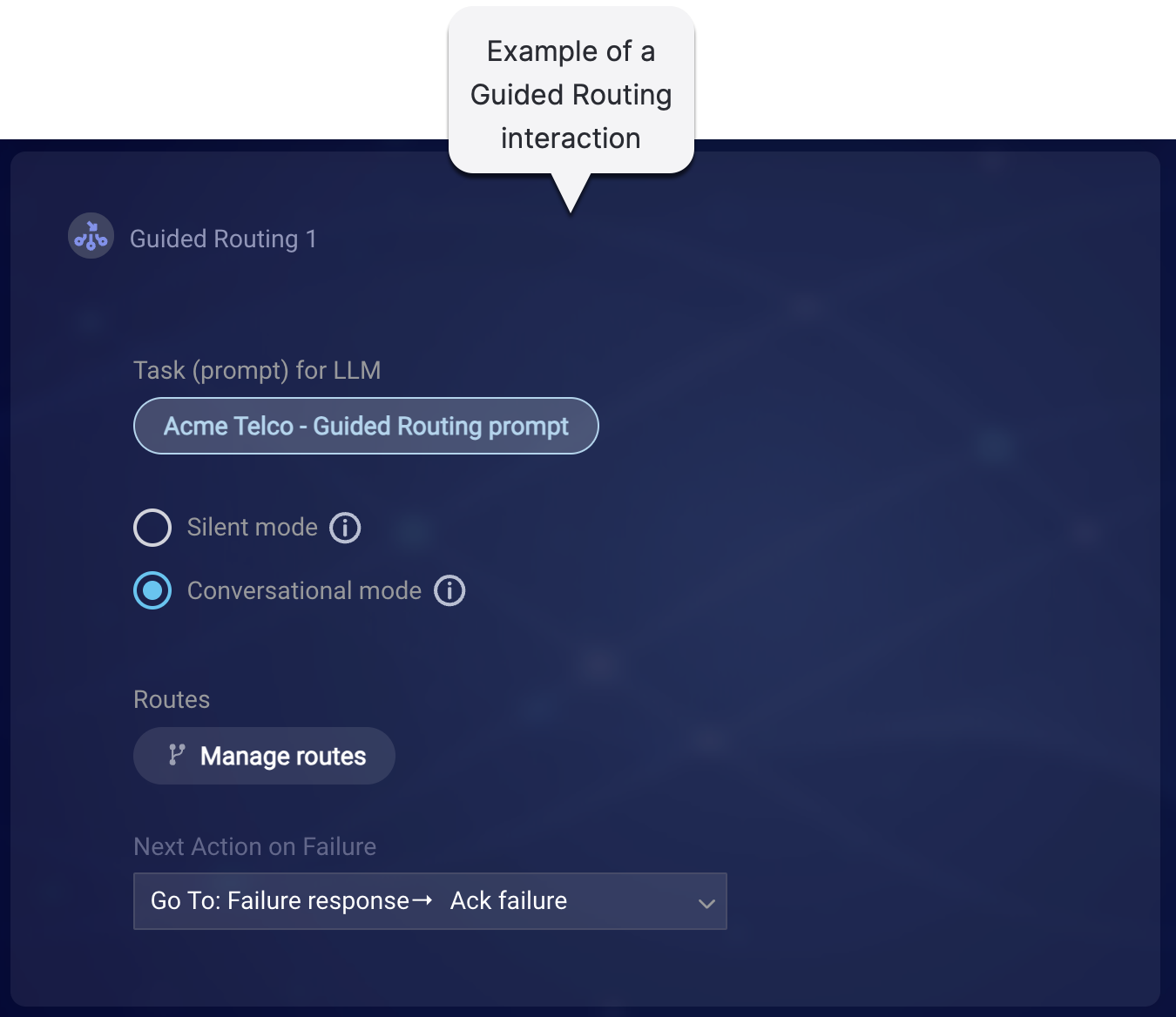

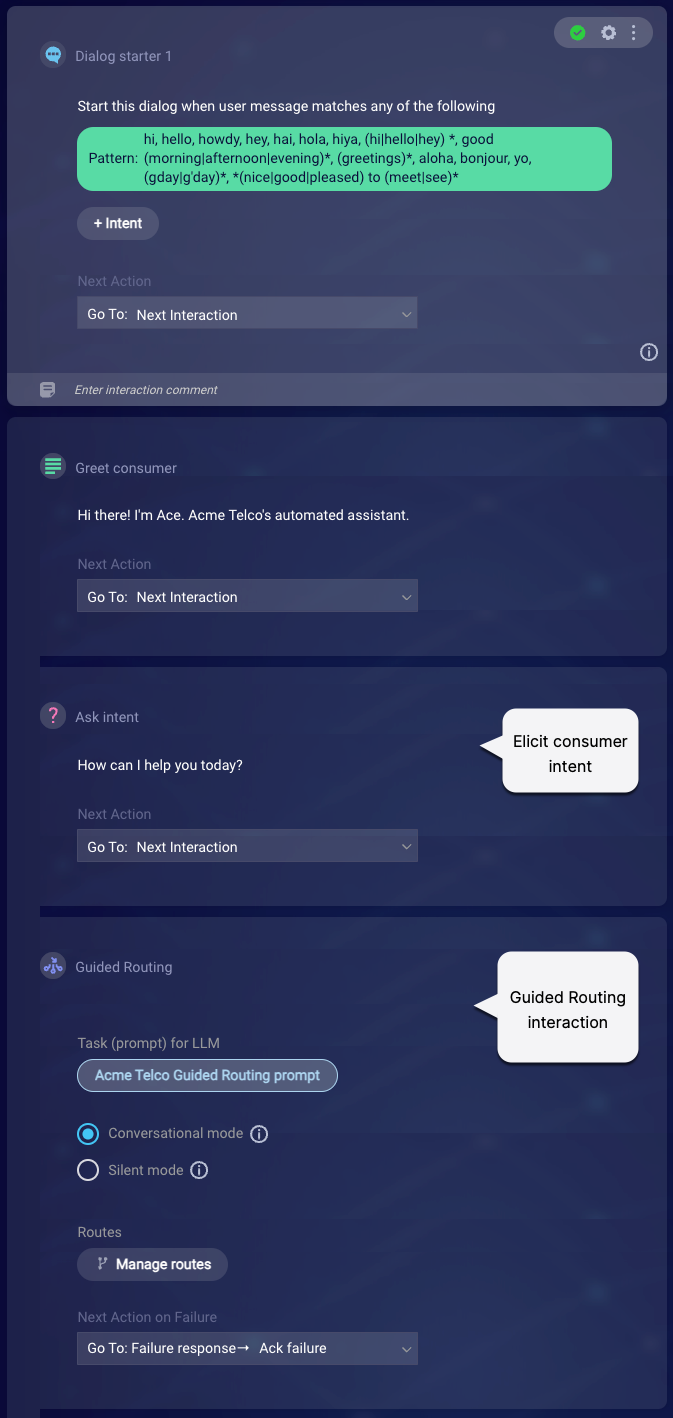

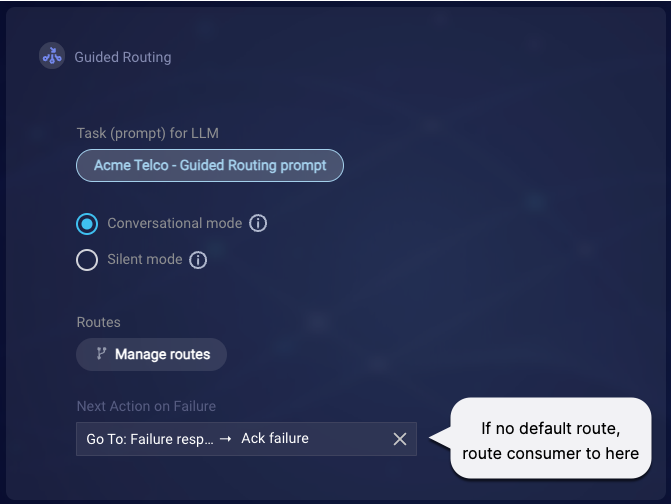

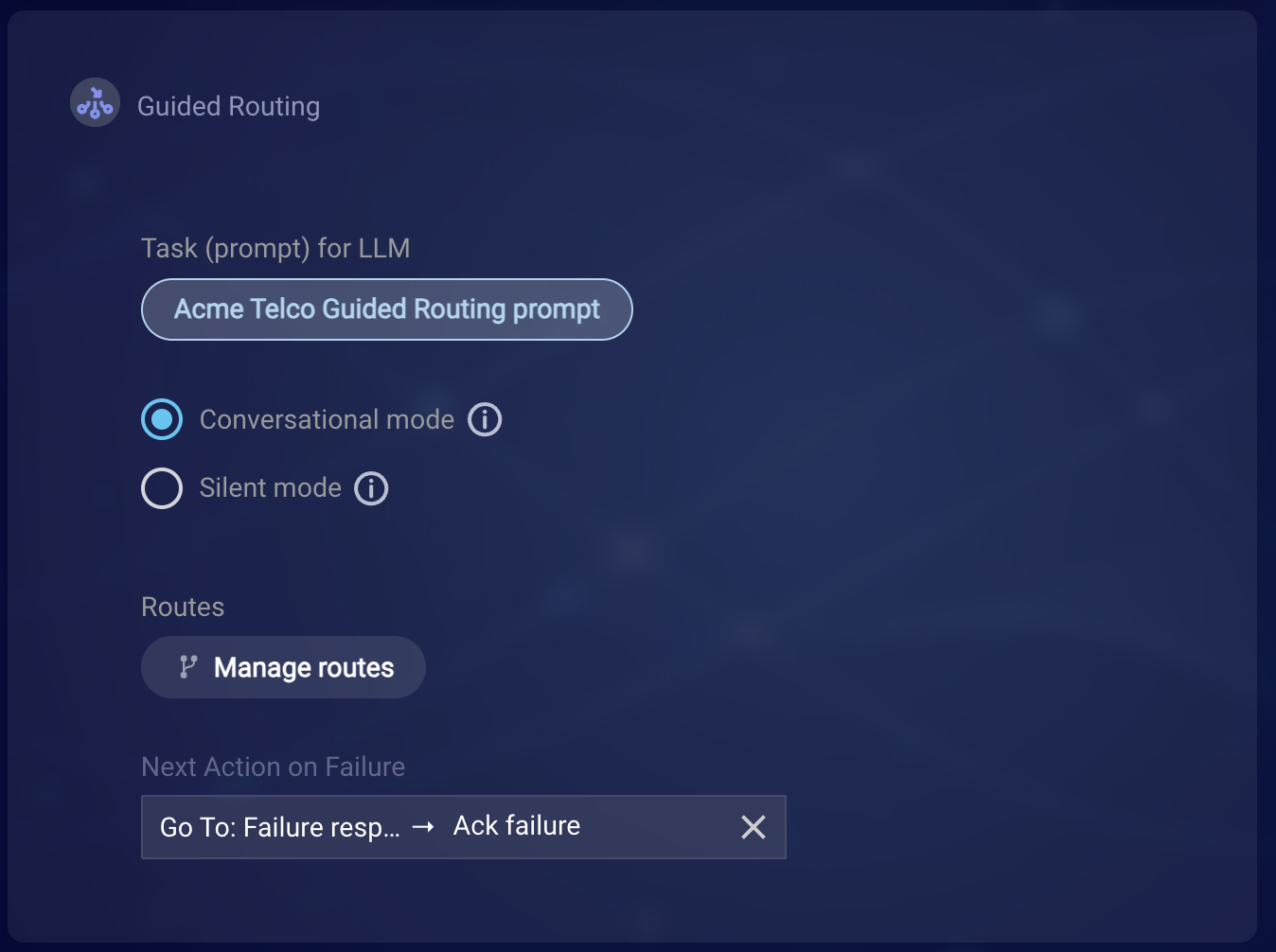

The key component in a Routing AI agent is a Guided Routing interaction:

In order to see this interaction in the tool palette in Conversation Builder, you must activate LivePerson’s Generative AI features.

Additionally, you must access Conversation Builder by logging into Conversational Cloud (our single sign-on approach). Brands that log into Conversation Builder directly don’t have access to the Guided Routing interaction.

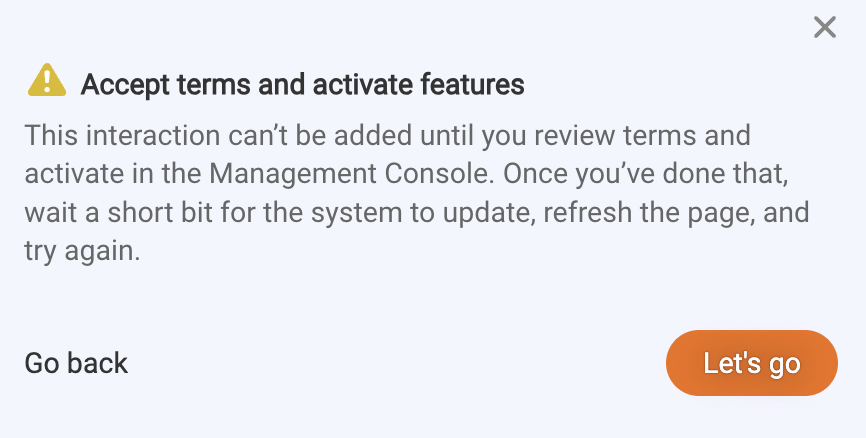

Accept terms regarding Routing AI agents

If your organization hasn’t already accepted applicable terms for using Routing AI agents via a direct, offline process with LivePerson, you can’t add the Guided Routing interaction to a bot. Instead, you’ll see this in Conversation Builder when you try:

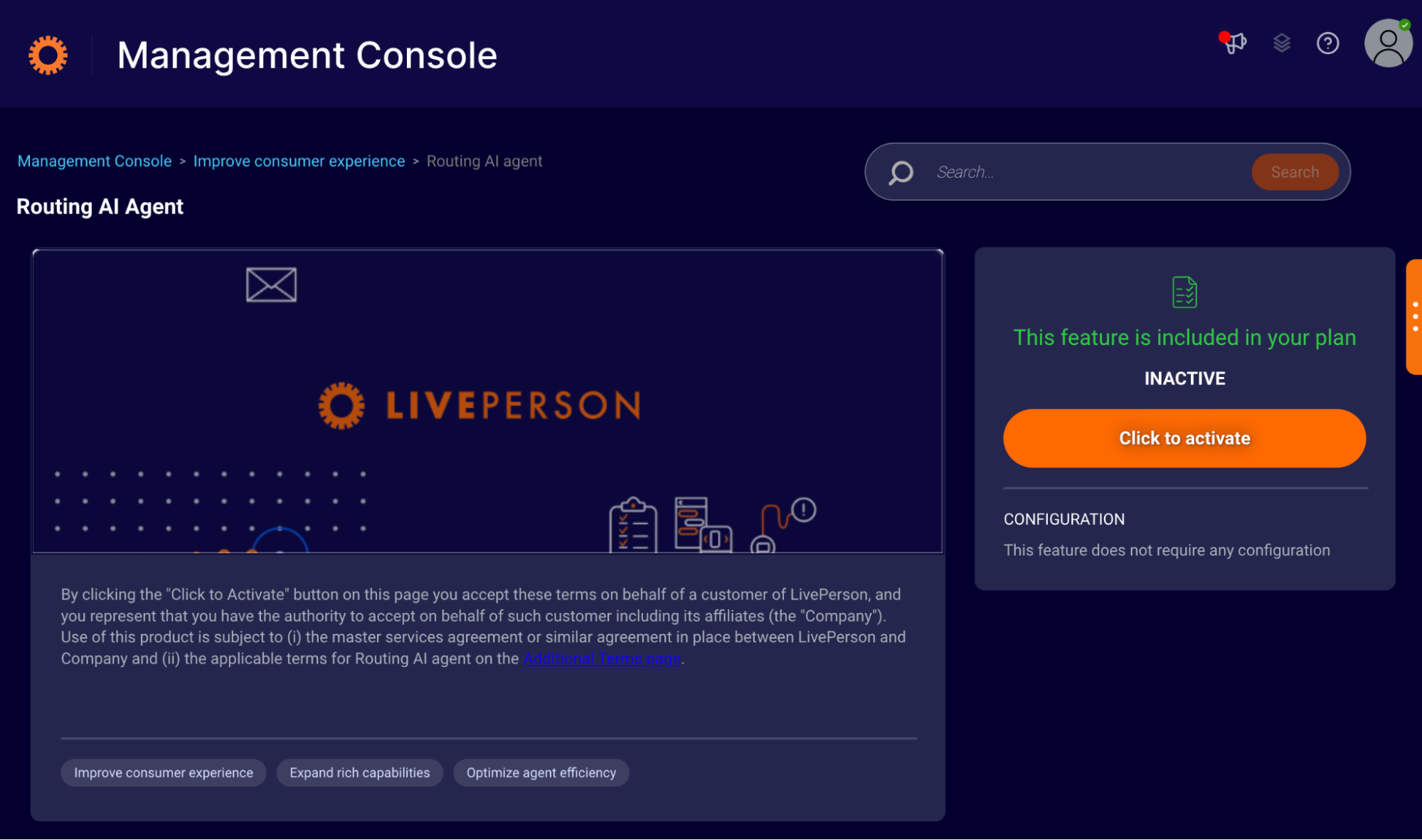

You can get rolling by accepting and activating online: Clicking Let’s go takes you to the Management Console, where you can review the terms:

If you accept the terms on behalf of your brand:

- Click “Click to activate.”

- Give the system 5-10 minutes to update itself.

- Manually refresh the page.

- Return to Conversation Builder and retry the task of adding the interaction.

Acceptance of the terms and activation are one-time-only tasks for your account.

Prerequisites to a Production rollout

Feel free to experiment, build, and test your Routing AI agent as much as needed. However, before you roll it out for use with live traffic in Production, it’s vital that you contact your LivePerson representative! Please fill out this form to schedule a meeting with us.

We want to ensure that your Routing AI agent won’t be adversely impacted by the capacity constraints of the third-party LLM that’s used. Please collaborate with us regarding your plans and the expected traffic, so we can work together to ensure a successful release.

Flow diagram

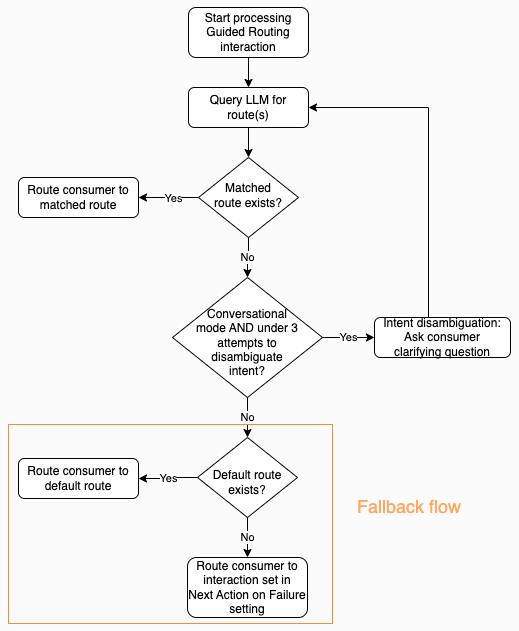

The following diagram visualizes the Guided Routing flow, including the logical fallback flow. Sections that follow dive into greater detail.

Intent detection and routing

Routing basics

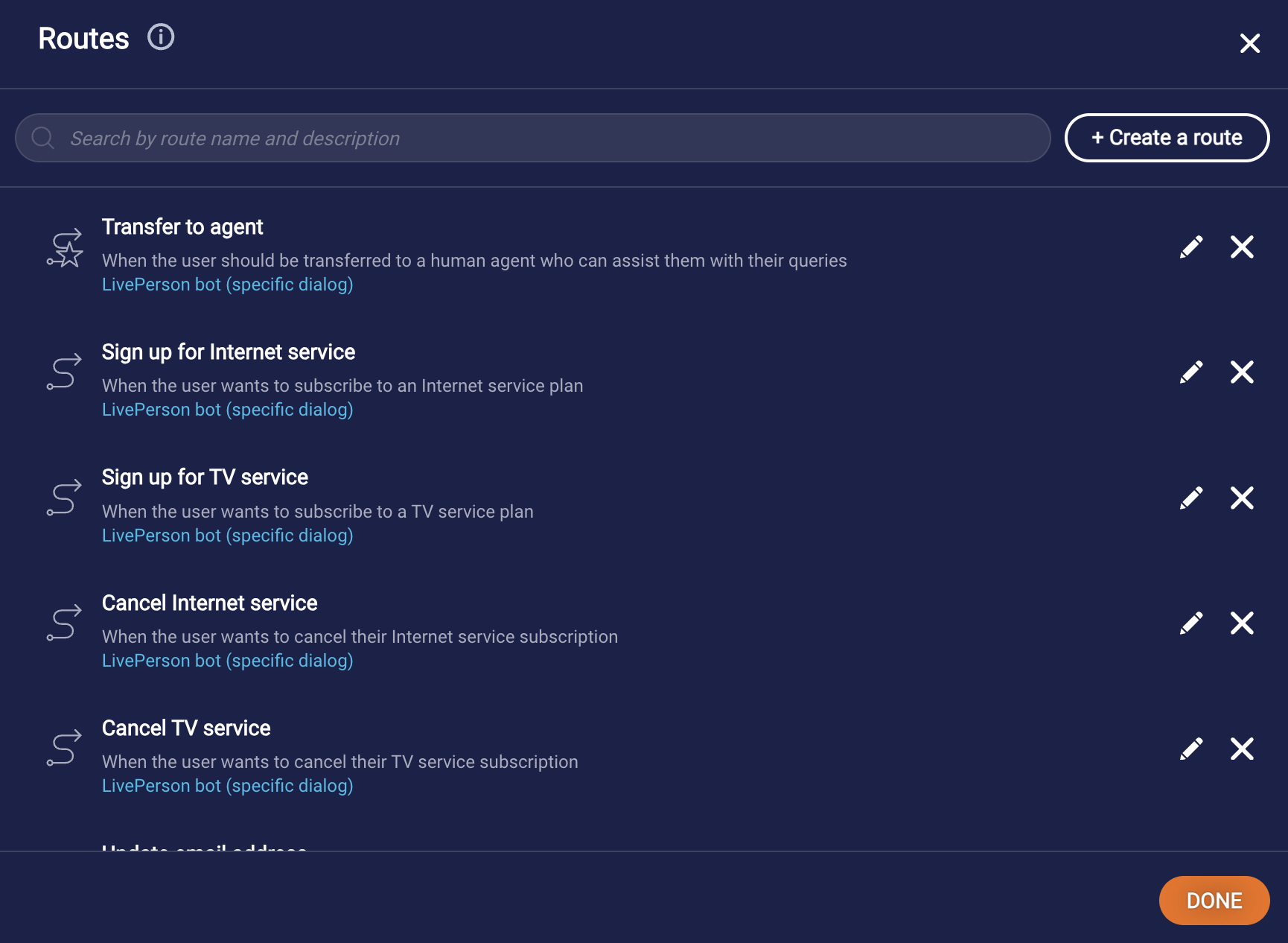

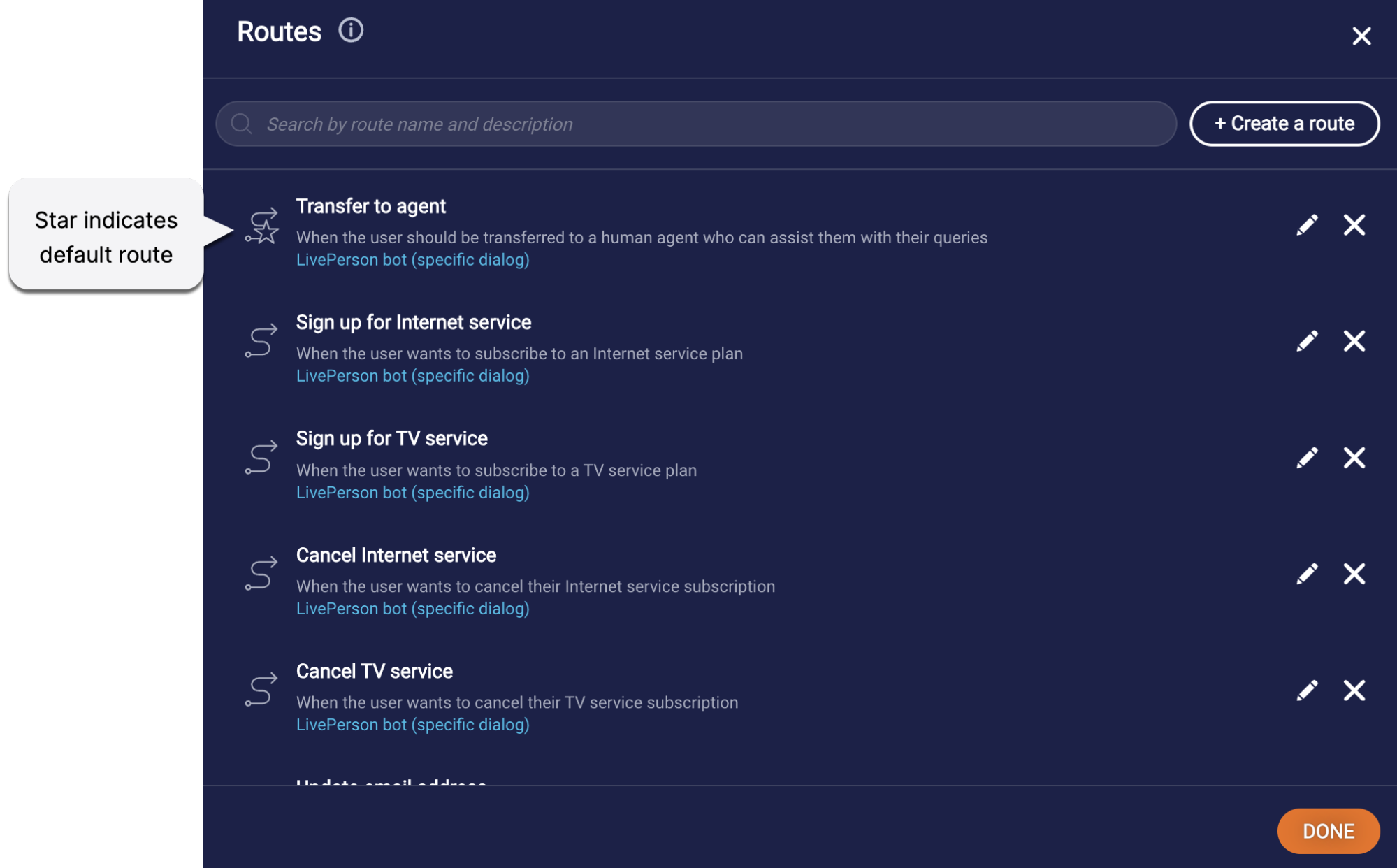

The routes defined in the Guided Routing interaction power the intent detection and routing experience.

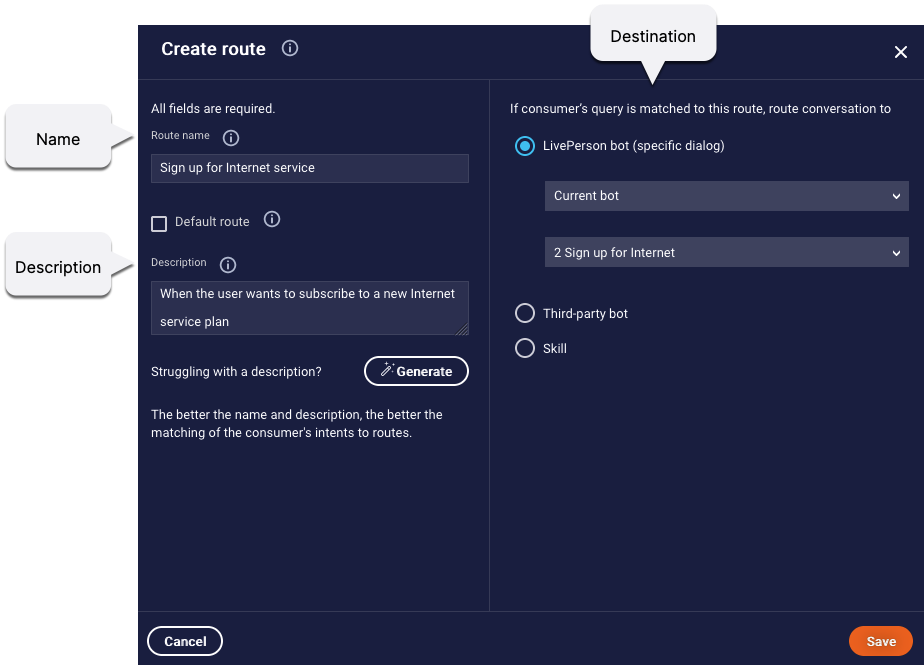

Every route has 3 components:

- A name

- A description

- A destination

The LLM determines where to route the consumer by matching the consumer’s query to one or more routes. This is done by evaluating the query against the names and descriptions of each defined route.

If a matched route is found, the consumer is routed to the destination.
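To make the three route components concrete, here’s a minimal Python sketch that models routes as plain data and renders them as text an LLM could match a query against. It’s an illustration only: the `Route` class, `render_intent_list`, the text layout, and the `[ROUTE::NAME]` tag derived from each route name are assumptions, loosely modeled on the routing strings in the default prompt shown later in this article, not Conversation Builder’s internal format. The second route’s description and both destination labels are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical model of one Guided Routing route."""

    name: str         # brief but meaningful route name
    description: str  # tells the LLM when this route applies
    destination: str  # assumed free-text label for a dialog, third-party bot, or skill

    @property
    def route_tag(self) -> str:
        # Assumed raw routing string, e.g., "[ROUTE::UPDATE_EMAIL_ADDRESS]".
        slug = "_".join(self.name.upper().split())
        return f"[ROUTE::{slug}]"


def render_intent_list(routes: list[Route]) -> str:
    """Render one line per route: name, description, and routing string."""
    return "\n".join(
        f"- {r.name}: {r.description} -> {r.route_tag}" for r in routes
    )


routes = [
    Route(
        "Sign up for Internet service",
        "When the user wants to subscribe to an Internet service plan.",
        "Sales bot > Internet sign-up dialog",
    ),
    Route(
        "Update email address",
        "When the user wants to change the email address on their account.",
        "Support bot > Update email address dialog",
    ),
]
print(render_intent_list(routes))
```

The sketch makes the same point as the text: the only things the LLM has to go on are the name and description, so their wording directly shapes the match.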

Conversational versus Silent mode

The Guided Routing interaction can work in Conversational mode or Silent mode.

Conversational mode

- The bot uses the last 10 messages between the bot and the consumer to query the LLM to determine the best route for the consumer’s intent.

- The bot sends a message to the consumer to acknowledge the intent and routes the consumer to the matched route.

Silent mode

- The bot uses the last 10 messages between the bot and the consumer to query the LLM to determine the best route for the consumer’s intent.

- The bot routes the consumer silently. It doesn’t engage in any dialogue with the consumer.

Silent mode works a lot like the NLU-based intent detection in a traditional bot in that the consumer’s message is matched to an intent, and the consumer is routed accordingly. No natural language response is generated and sent to the consumer. That said, Silent mode in a Routing AI agent performs better than NLU-based intent detection.
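The difference between the two modes can be summarized in a small, hypothetical Python sketch. It assumes both modes receive the same LLM result (a generated reply plus an optional matched route, computed from the last 10 messages) and differ only in whether the reply is sent to the consumer. `Mode` and `routing_turn` are illustrative names, not part of Conversation Builder.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    CONVERSATIONAL = "conversational"
    SILENT = "silent"


def routing_turn(
    mode: Mode, generated_reply: str, matched_route: Optional[str]
) -> tuple[Optional[str], Optional[str]]:
    """Return (message_for_consumer, route) for one Guided Routing turn."""
    if mode is Mode.SILENT:
        # Silent mode never talks to the consumer; it only routes (or falls back).
        return None, matched_route
    # Conversational mode sends the generated acknowledgment (or a clarifying
    # question when no route matched) along with the routing decision.
    return generated_reply, matched_route


print(routing_turn(Mode.SILENT, "Happy to help you add TV service!", "TV_SIGN_UP"))
print(routing_turn(Mode.CONVERSATIONAL, "Happy to help you add TV service!", "TV_SIGN_UP"))
```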

The importance of the consumer’s most recent message

The Routing AI agent works to discern the consumer’s intent in their most recent message to determine how to route them. So ensure you use the Guided Routing interaction in an appropriate location in the bot, and in an appropriate mode (Conversational or Silent) for that location.

For example, you might have a concierge bot that greets the consumer. It has a Welcome dialog that’s triggered via a pattern match to various forms of “hello.” Following the dialog starter, there’s a question that asks, “How can I help you today?” In this case, it makes sense to insert the Guided Routing interaction immediately after the question because the consumer’s answer to the question is appropriate to pass to the Guided Routing flow.

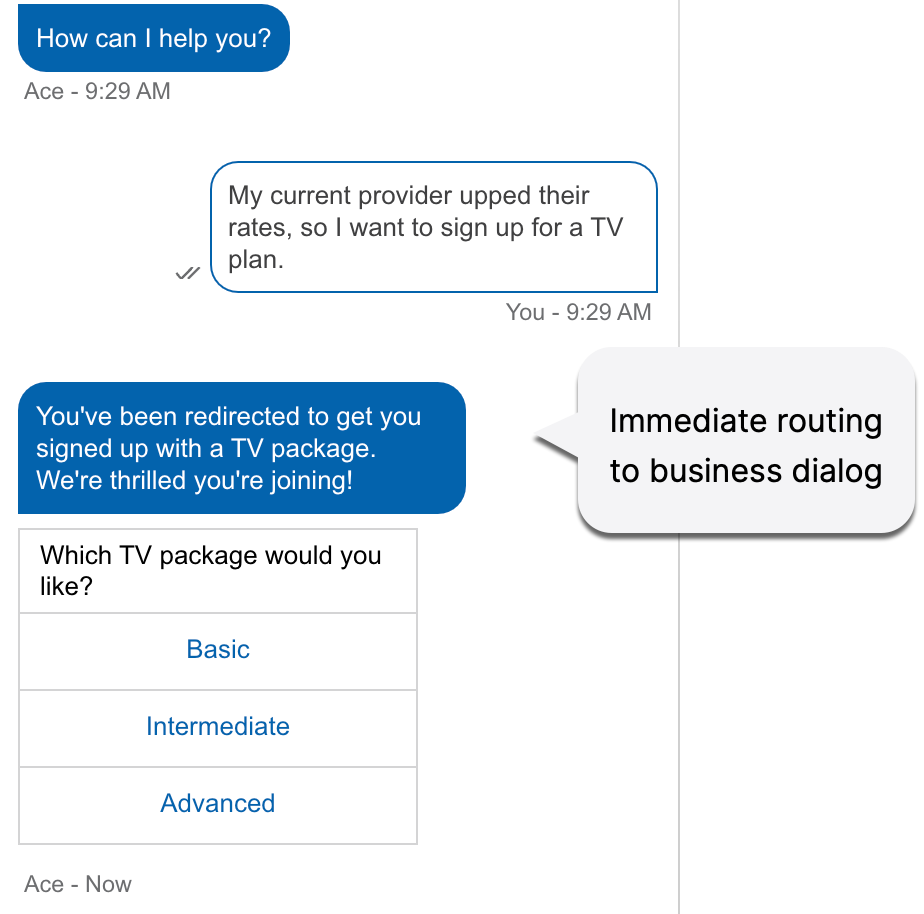

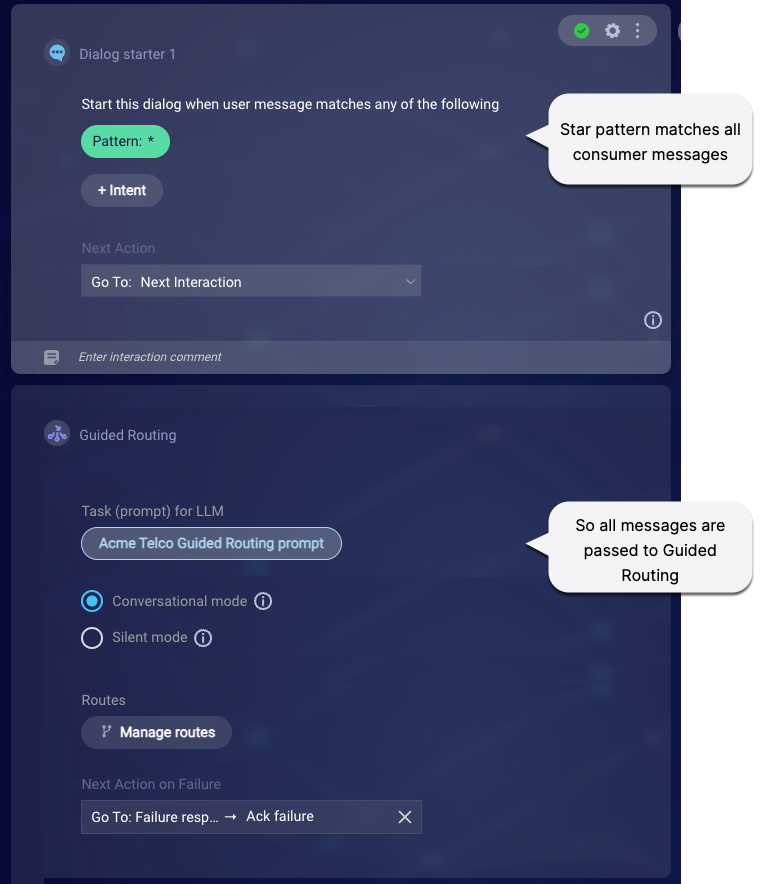

On the other hand, you might have a concierge bot that likewise greets the consumer. It has a Welcome dialog that’s triggered via the “*” (wildcard) pattern, which means the dialog is always triggered no matter what the consumer says. In this case, you could insert the Guided Routing interaction immediately after the dialog starter. No question is needed.

In this second case, the consumer might begin with an intentful message, such as, “Hi! Can I sign up for TV service?” But they might also start off with just, “Hi!” Why does the latter work? It works as long as the Guided Routing interaction is in Conversational mode, and it shows off the power of the Routing AI agent well. If the bot can’t determine how to route the consumer based on just “Hi!” (and, of course, it can’t), the bot attempts to clarify the intent by asking the consumer a question. This is known as intent disambiguation.

The importance of the conversation context

The Routing AI agent relies on previous turns from the current conversation to interpret the consumer’s last message. It also uses this conversational context to generate a response to send to the consumer. For these reasons, the last 10 messages between the bot and the consumer are always sent in the prompt to the LLM.

Currently, it’s not possible to customize this number of messages.
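As a rough illustration of the fixed 10-message window, this Python sketch keeps only the most recent messages and formats them as a transcript. The `(speaker, text)` tuple format and `build_llm_context` are assumptions made for the example; this article doesn’t describe how Conversation Builder actually assembles the prompt.

```python
CONTEXT_WINDOW = 10  # per this article, fixed at 10 and not currently configurable


def build_llm_context(
    messages: list[tuple[str, str]], window: int = CONTEXT_WINDOW
) -> str:
    """Format the last `window` (speaker, text) pairs as a transcript (hypothetical)."""
    # max(..., 0) handles conversations shorter than the window (and window=0).
    recent = messages[max(len(messages) - window, 0):]
    return "\n".join(f"{speaker}: {text}" for speaker, text in recent)


conversation = [
    ("Consumer" if i % 2 else "Bot", f"message {i}") for i in range(1, 13)
]
print(build_llm_context(conversation))  # messages 3 through 12; 1 and 2 are dropped
```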

Fallback flows for failures

There are 2 kinds of failures:

- Logical failures: These are situations where the bot can’t match the consumer’s query to one of the defined routes.

- System failures: These are related to runtime processes. The errors can be external (e.g., the LLM is not responding) or internal (e.g., a Conversation Builder error occurred when processing the interaction).

Logical failure flow

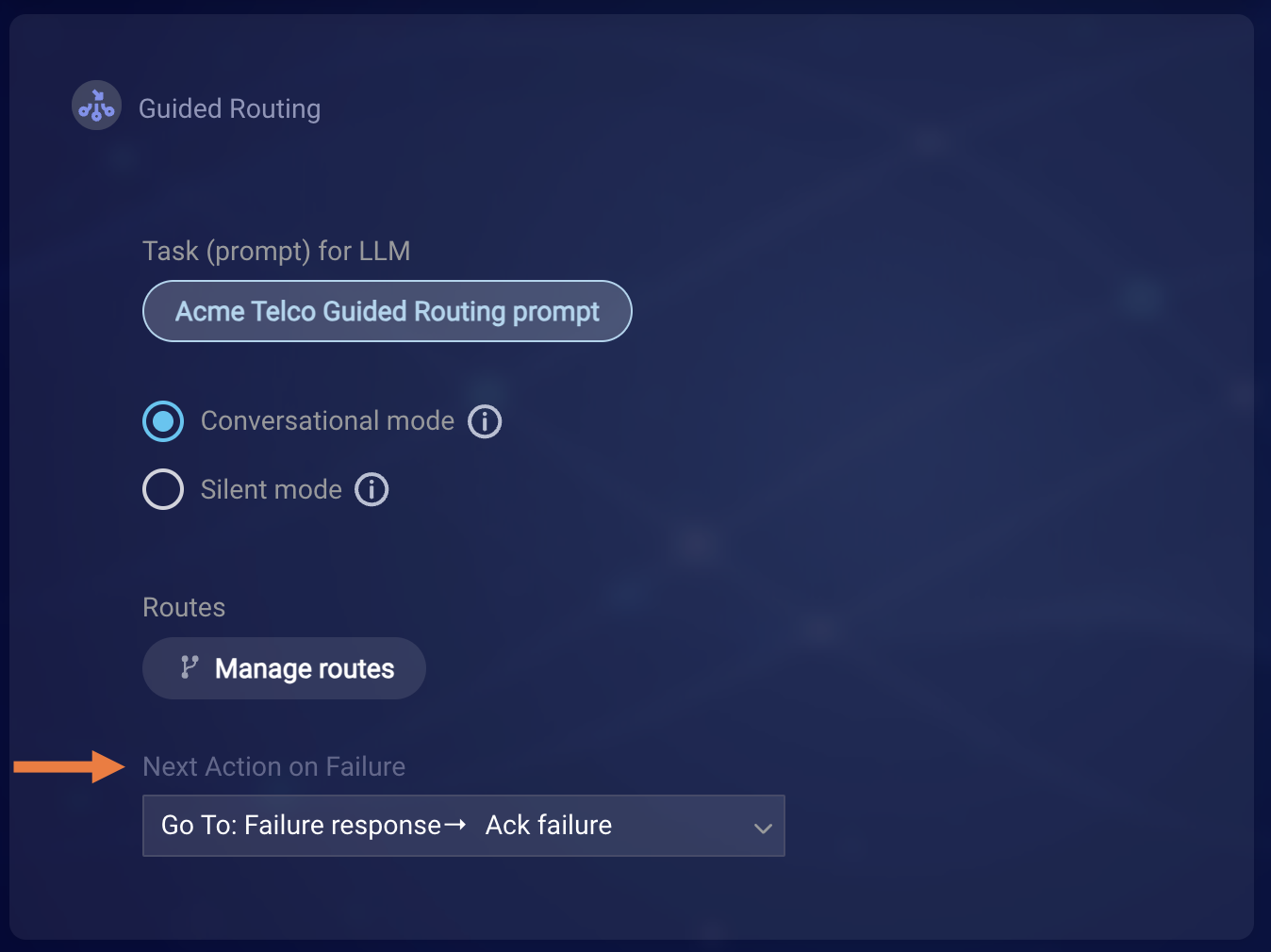

In Conversational mode, if the bot can’t match the consumer’s query to one of the defined routes, the bot is likely to attempt to disambiguate the consumer’s intent by asking clarifying questions. If those attempts fail, the conversation is redirected to the default route. If a default route doesn’t exist, the conversation is redirected to the interaction set in the Guided Routing interaction’s Next Action on Failure setting.

Silent mode doesn’t involve any dialogue between the bot and the consumer. So in this case, no intent disambiguation occurs. The conversation is immediately redirected to the default route. Here again, if a default route doesn’t exist, the conversation is redirected to the interaction set in the Guided Routing interaction’s Next Action on Failure setting.

See the fallback subflow in the Guided Routing flow diagram.

System failure flow

If a system failure occurs (e.g., the LLM is not responding), the conversational flow is directed to the interaction set in the Guided Routing interaction’s Next Action on Failure setting.

Handling failures

As a best practice, always define a default route.

Additionally, set Next Action on Failure in the Guided Routing interaction as you require. By default, it points to the Fallback dialog if one exists; otherwise, it points to the next interaction in the dialog. But, to ensure the consumer’s intent is resolved, you might want to redirect the flow to a dialog that transfers the conversation to an agent.

Next Action on Failure doesn’t work like Next Action in question interactions. That is, you can’t use Next Action on Failure to define custom rules that direct the conversational flow.
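The order in which the fallback options apply can be expressed as a short decision function. This is a hypothetical Python sketch: `resolve_destination` and its parameters are illustrative, and `matched_route` is assumed to already reflect any disambiguation that happened in Conversational mode (in Silent mode, no disambiguation occurs).

```python
from typing import Optional


def resolve_destination(
    matched_route: Optional[str],
    default_route: Optional[str],
    next_action_on_failure: str,
    system_failure: bool = False,
) -> str:
    """Pick where the flow goes after Guided Routing finishes (hypothetical sketch).

    matched_route is None when no route matched (a logical failure).
    """
    if system_failure:
        # System failures (e.g., the LLM isn't responding) skip the default route.
        return next_action_on_failure
    if matched_route is not None:
        return matched_route
    # Logical failure: prefer the default route, else Next Action on Failure.
    if default_route is not None:
        return default_route
    return next_action_on_failure


print(resolve_destination(None, "Transfer to agent", "Fallback dialog"))
```

Note that in this model a system failure bypasses the default route entirely, which is why it’s worth setting Next Action on Failure deliberately even when a default route exists.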

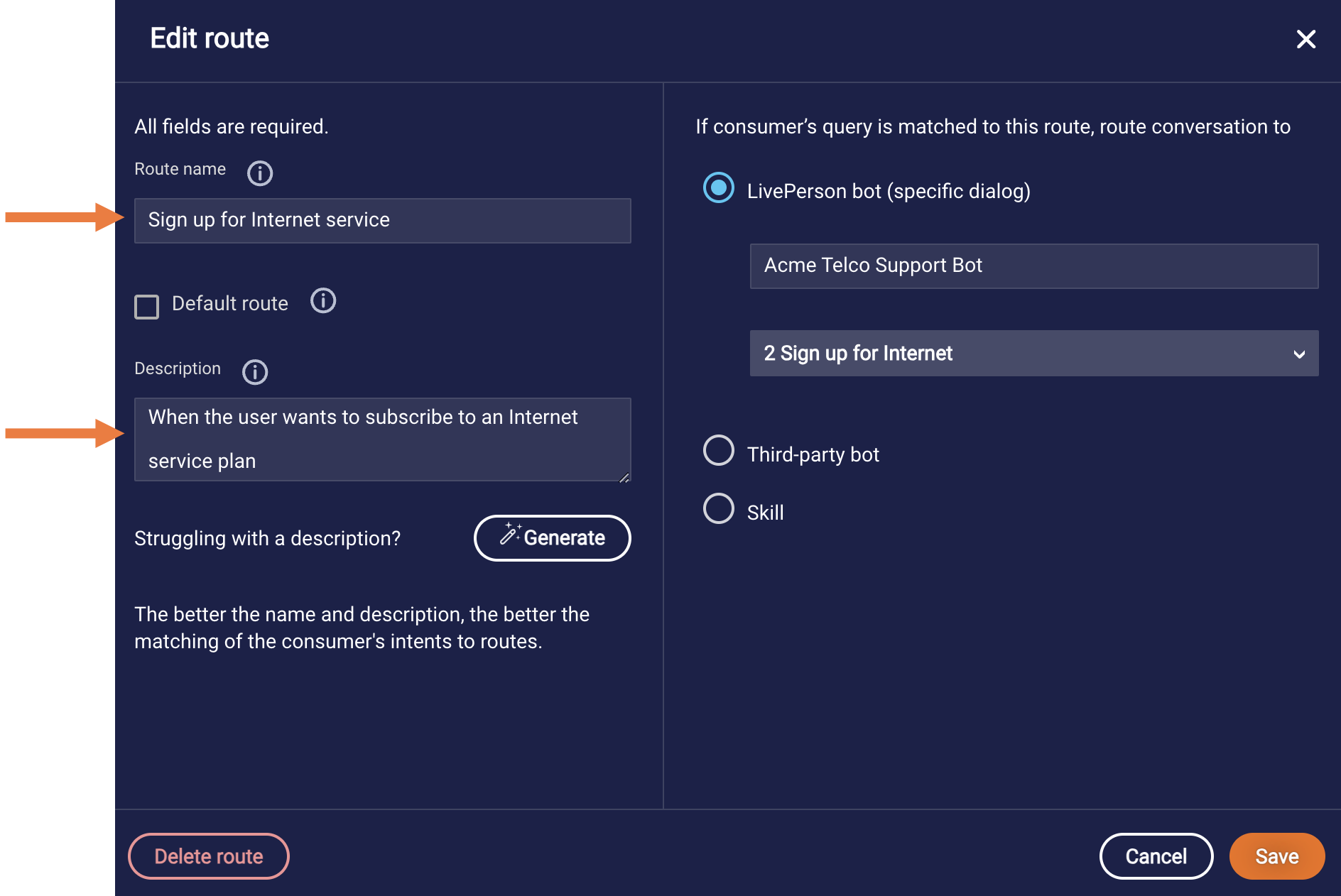

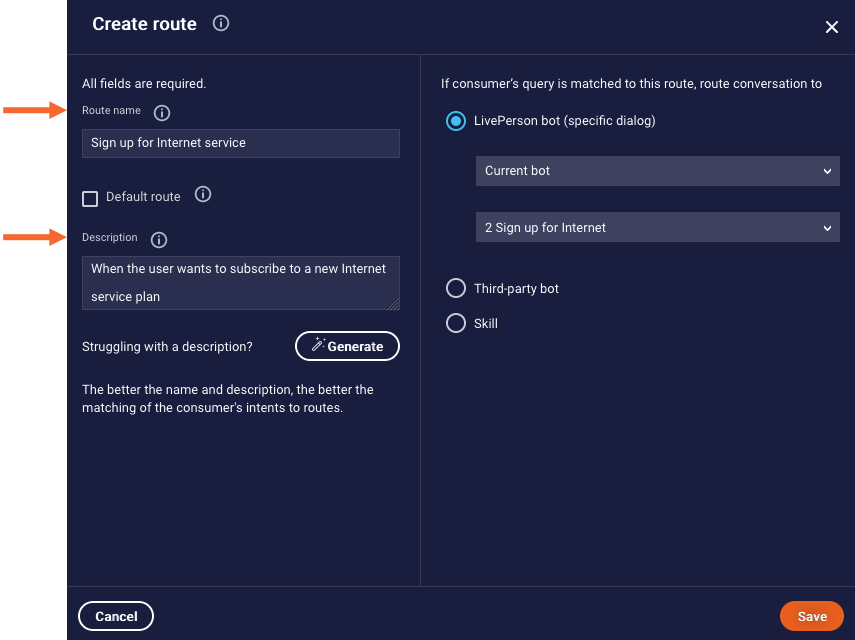

Route definition

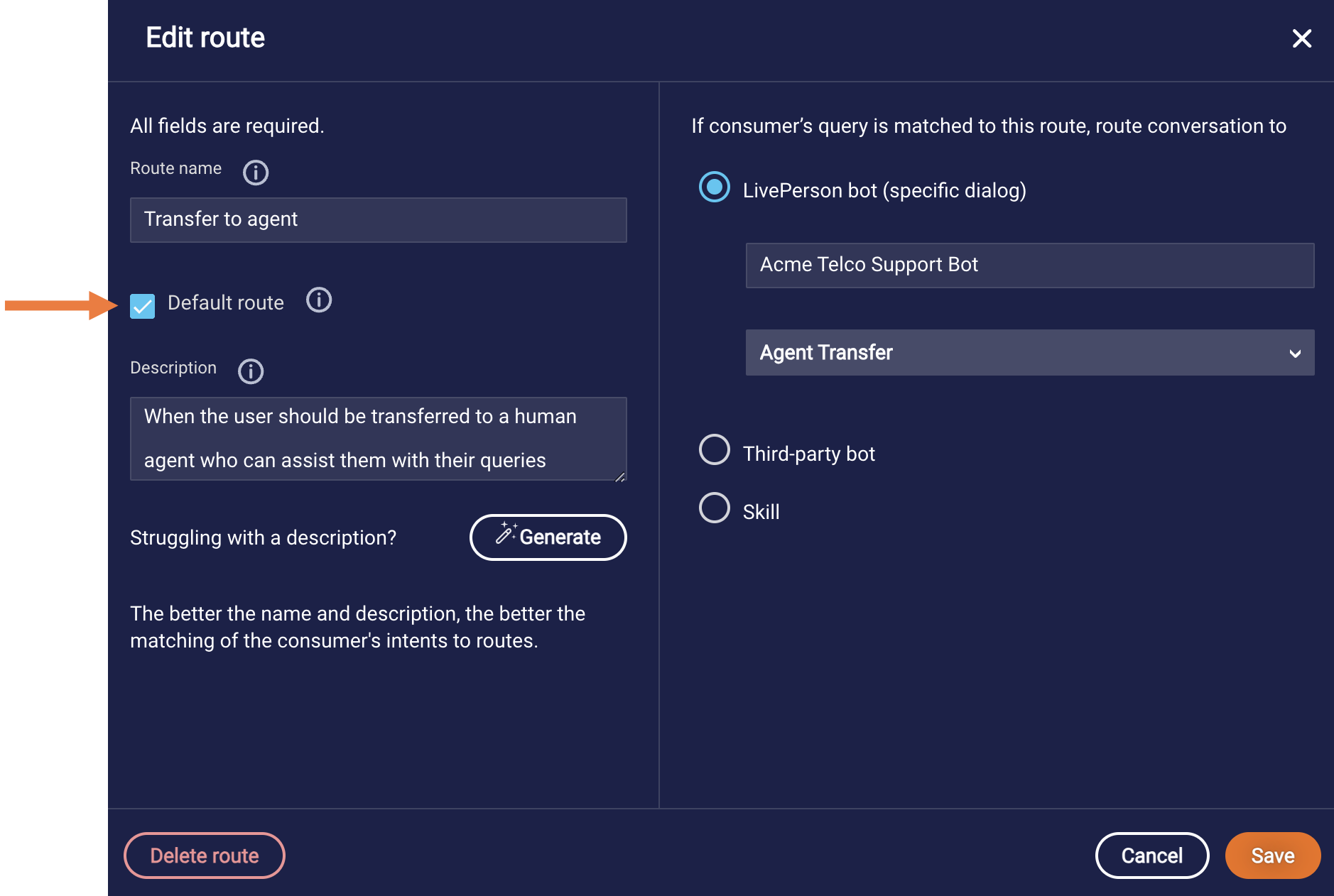

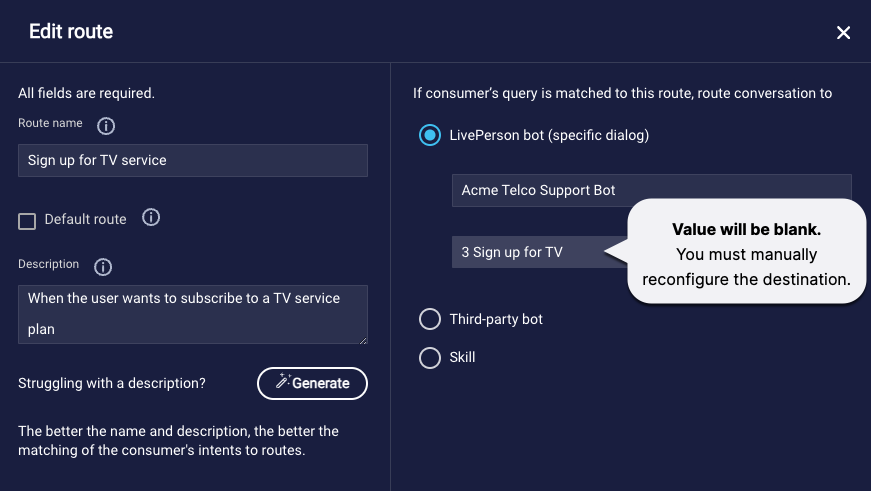

Route definition within the Guided Routing interaction is the most critical step in setup. You must specify a route name, description, and destination.

Route name and description

The better the name and description, the better the consumer’s routing experience.

Invest time in creating strong route names and descriptions:

- Route name: Enter a brief but clear and meaningful name, for example, “Sign up for Internet service.”

- Description: Enter a clear and meaningful description, for example, “When the user wants to subscribe to an Internet service plan.”

If you’re struggling with the description, use Generative AI to get things rolling: Click the Generate button, and the system will use an LLM to generate a description based on the route name. You can keep the generated description as is, customize it, or write your own.

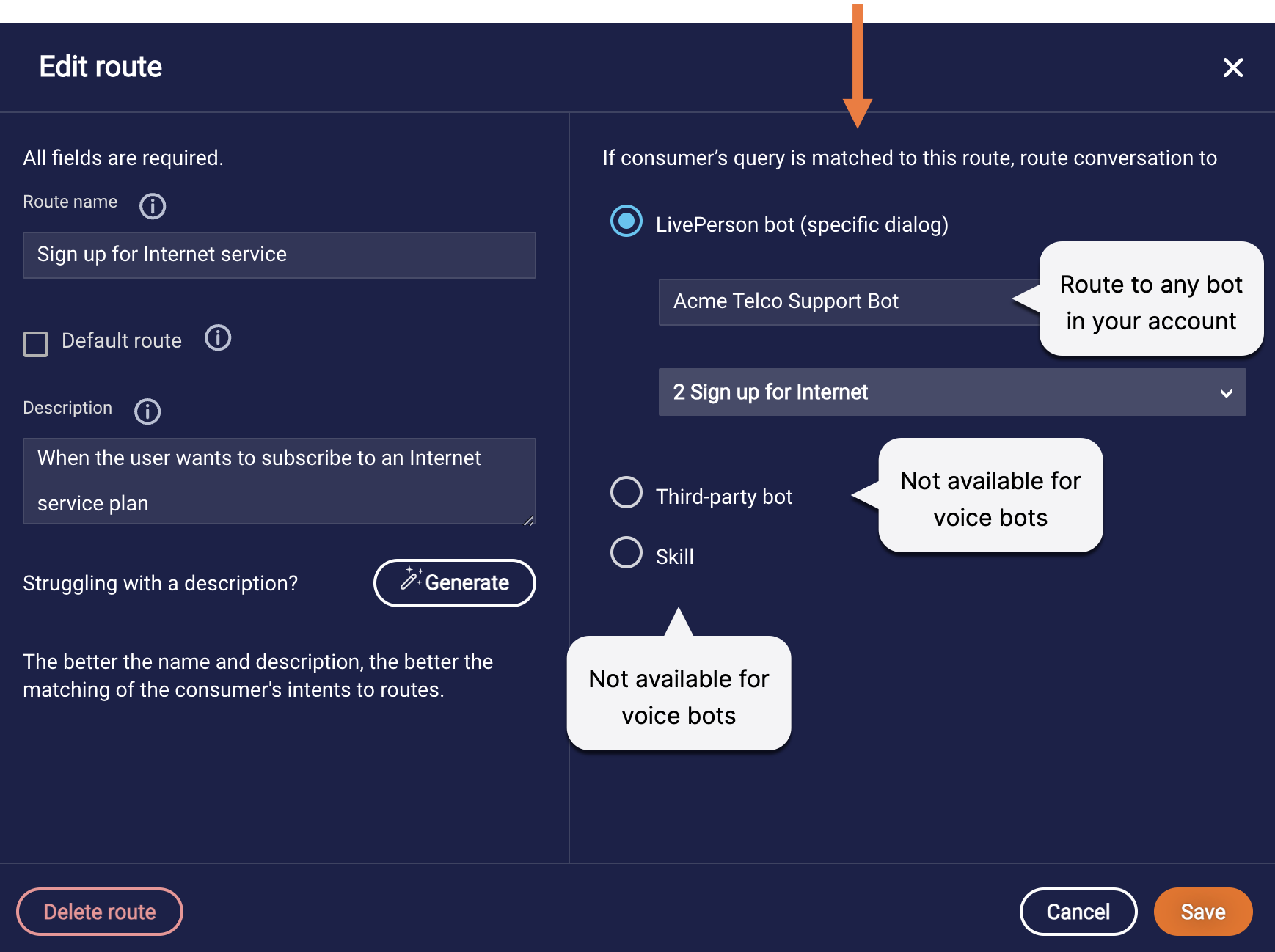

Route destination

In a route, you must specify where to direct the conversational flow when the LLM matches the consumer’s query to the route. You can choose from:

| Routing destination | Available in |
|---|---|
| LivePerson bot (specific dialog) | messaging bot, voice bot |
| Third-party bot | messaging bot |
| Skill | messaging bot |

Each of these 3 routing destinations is discussed below.

During the conversational journey, the bot might be collecting data about the consumer and conversation (consumer’s first name, etc.). If you need to ensure this data is transferred to the destination bot, explicitly save it in LivePerson’s Conversation Context Service (CCS). The CCS is a cloud-based repository for storing and retrieving such data.

Transfer to LivePerson bot (specific dialog)

You can select to transfer the conversation to any dialog in the current bot except the dialog that contains the Guided Routing interaction (to prevent circular references). You can also select to transfer the conversation to any dialog in any other LivePerson Conversation Builder bot in your account.

When the conversation is routed to the dialog, the dialog’s dialog starter is skipped; the flow moves directly to the next interaction.

Looking for the current bot but don’t see it in the list? Look for it by name.

Transfer to third-party bot

You can select to transfer the conversation to any third-party bot in your account.

When the conversation is routed to the bot, the consumer’s most recent message is passed to the bot, and the bot simply handles the query per its design.

Transfer to skill

You can select to transfer the conversation to any skill defined in your account. This can be a skill assigned to agents or bots. When the conversation is routed to the skill, the Agent Workspace’s agent matching rules are used to assign the conversation based on capacity.

Using a default route

You can optionally specify one route in the Guided Routing interaction as the default route. The default route plays an important role in the fallback flow for failures, i.e., times when the Routing AI agent can’t identify a suitable route for the consumer’s intent.

Since there can be only one default route per Guided Routing interaction, selecting the Default route checkbox for one route clears it for another route.

Define the default route as you require. For example, to ensure the consumer’s intent is resolved, you might use it to transfer the conversation to an agent.
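The one-default-per-interaction rule behaves like a radio button. A hypothetical Python model of the Default route checkbox might look like this; the `RouteDefaults` class and the route names are illustrative, not Conversation Builder objects.

```python
from typing import Optional


class RouteDefaults:
    """Tracks the Default route checkbox for each route (hypothetical model)."""

    def __init__(self, route_names: list[str]) -> None:
        self.is_default = {name: False for name in route_names}

    def select_default(self, name: str) -> None:
        if name not in self.is_default:
            raise KeyError(name)
        # Checking one box clears every other box: at most one default route.
        for route in self.is_default:
            self.is_default[route] = route == name

    def default_route(self) -> Optional[str]:
        return next((n for n, checked in self.is_default.items() if checked), None)


routes = RouteDefaults(["TV sign-up", "Update email address", "Talk to an agent"])
routes.select_default("TV sign-up")
routes.select_default("Talk to an agent")  # clears "TV sign-up"
print(routes.default_route())  # Talk to an agent
```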

Intent disambiguation

Consumers don’t always express their intents clearly, so it might happen that the Routing AI agent isn’t able to discern the consumer’s intent. When this happens, the bot attempts to clear things up with the consumer. This is known as intent disambiguation.

Typically, intent disambiguation happens when the bot can’t identify a matched route:

But intent disambiguation also might happen when the bot identifies multiple matched routes:

Keep in mind that intent disambiguation only happens if the Guided Routing interaction is configured for Conversational mode. It doesn’t happen in Silent mode because there’s no opportunity for the consumer to engage in dialogue with the bot and thereby clarify their intentions.

Left to its own devices, the Routing AI agent would keep attempting to disambiguate the consumer’s intent indefinitely. However, to facilitate an optimal consumer experience, Conversation Builder limits this to a maximum of three (3) attempts. Currently, this number isn’t configurable.

If the attempt to disambiguate fails to result in a matched route, the logical fallback flow begins.
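The capped disambiguation loop can be sketched in Python as follows. `disambiguate` and the `ask_and_match` callback are hypothetical; the callback stands in for one round of sending a clarifying question and asking the LLM to match the reply. Only the limit of three attempts comes from this article.

```python
from typing import Callable, Optional

MAX_DISAMBIGUATION_ATTEMPTS = 3  # the fixed limit described in this article


def disambiguate(
    ask_and_match: Callable[[int], Optional[str]],
    max_attempts: int = MAX_DISAMBIGUATION_ATTEMPTS,
) -> Optional[str]:
    """Return the matched route, or None once the attempts run out."""
    for attempt in range(1, max_attempts + 1):
        # Hypothetical callback: ask one clarifying question, return a route or None.
        route = ask_and_match(attempt)
        if route is not None:
            return route
    return None  # no match: the caller starts the logical fallback flow


# Example: the consumer's reply to the second clarifying question matches a route.
replies = {1: None, 2: "TV sign-up"}
print(disambiguate(lambda attempt: replies.get(attempt)))  # TV sign-up
```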

The impact of the prompt on disambiguation

The LLM tries to follow the behavior demonstrated in the examples in the prompt. If the consumer’s query is very dissimilar to the scenarios in the Guided Routing prompt, disambiguation might fail. For this reason, we highly recommend that you customize the examples in the prompt. See "Prompt customization" in this article.

Disabling disambiguation

We don’t recommend that you remove support for disambiguation because it’s a powerful and useful part of the consumer’s experience. However, you can do so via prompt customization. (See "Prompt customization" in this article.) Use caution when editing this part of the prompt.

What about my Disambiguation dialog?

If you have a bot that uses a Disambiguation dialog, be aware that it remains unaffected. The Disambiguation dialog only comes into play during intent matching in dialog starters, which always happens before the Guided Routing interaction (or any interaction) is processed as the current interaction in the flow.

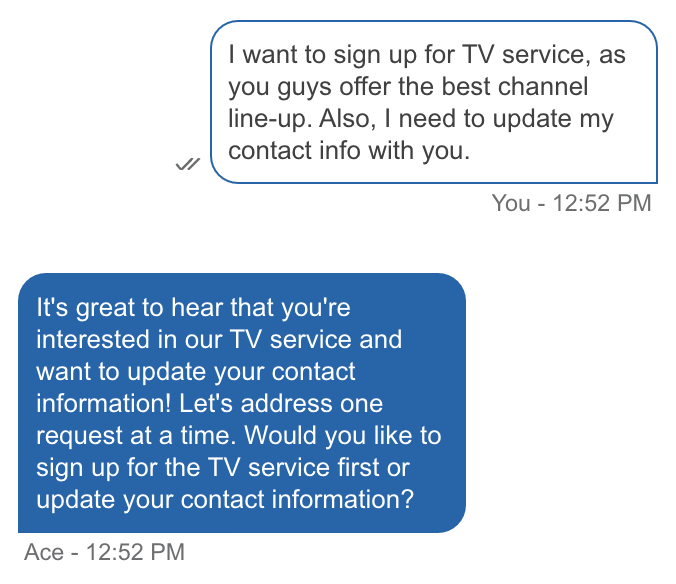

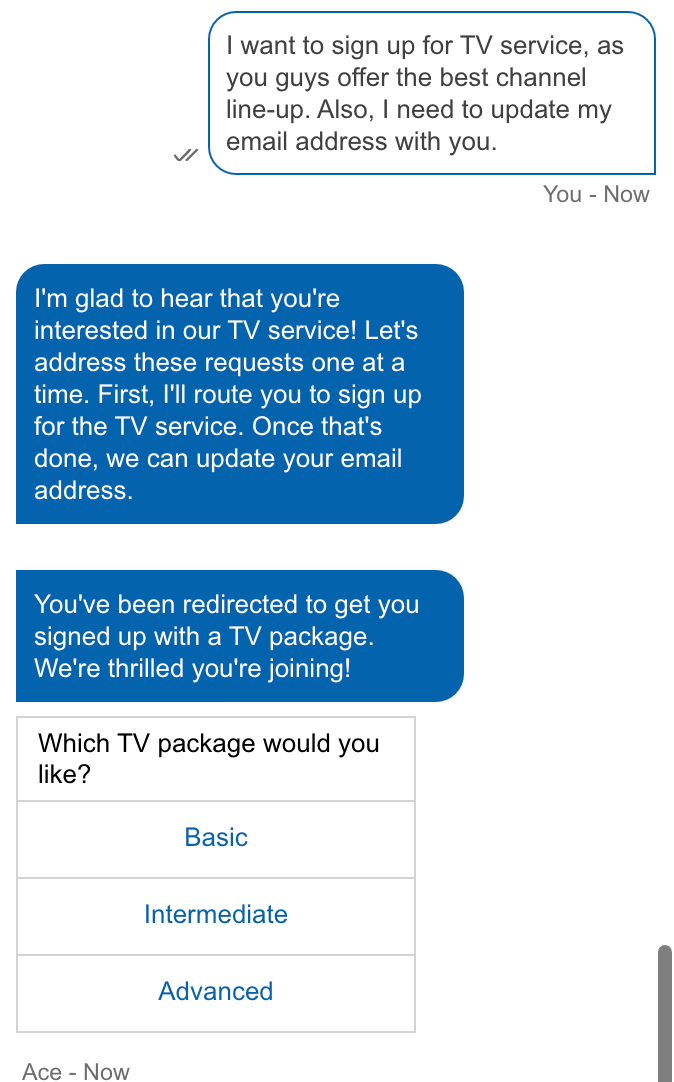

Handling multiple intents

Sometimes a consumer has multiple queries in a single message, such as, “I need to update my email address, and I want to add TV service.” The Routing AI agent can handle this.

Handling the 1st intent: Returning and prioritizing routes

When the Routing AI agent detects multiple intents in the consumer’s query and finds multiple matched routes suitable for those intents, the order in which the routes are returned to the bot is left up to the LLM.

In many cases, the generated response returned by the LLM is a question for the consumer as to which intent to handle first.

But this doesn’t always happen, as the LLM’s behavior is non-deterministic:

If you want to prioritize specific routes, try adding instructions on this to the “Closing instructions” in the prompt. See "Prompt customization" later in this article.

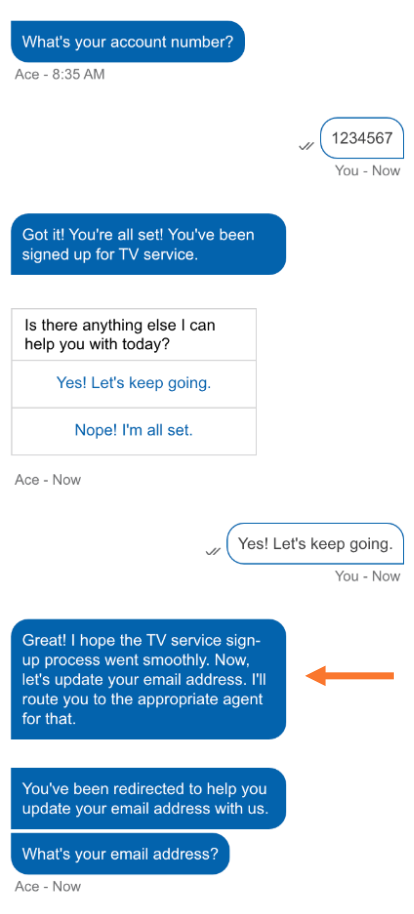

Handling the 2nd (3rd, etc.) intent

Every time the conversation moves back to the Guided Routing interaction, the Conversational Cloud conversation ID is used to fetch the 10 most recent messages in the conversation. These messages are provided as an additional input to the LLM as it determines the next route and generates a response.

The contextual memory and route info are collectively used to offer a warm transfer, like this one:

Bot-to-bot routing example

The details on how the contextual memory is accumulated and used are best understood by way of an example:

1. Acme Telco’s Concierge bot, a LivePerson bot, greets the consumer and asks for their query.

2. The consumer responds with, “I want to update my email address and also sign up for TV service.”

   Assume steps 1 and 2 take 4 messages between bot and consumer.

3. Within the Concierge bot, the conversation flow moves to the Guided Routing interaction, and the LLM is supplied with the first 4 messages in the conversation (a maximum of 10 is sent).

4. The LLM discerns the consumer’s 2 intents and returns 2 matched routes:

   - LivePerson Sales bot > “TV sign-up” dialog

   - LivePerson Support bot > “Update email address” dialog

5. The Concierge bot routes the conversation to the Sales bot’s “TV sign-up” dialog.

6. The Sales bot assists the consumer with the task. Assume this takes 15 messages between bot and consumer.

7. The Sales bot asks the consumer if they have any other queries to handle.

8. The consumer answers affirmatively, so the Sales bot transfers the conversation directly back to the Guided Routing interaction in the Concierge bot.

9. The Guided Routing interaction goes to work again: The last 10 messages in the conversation (from the Sales bot) are supplied as an additional input to the LLM. To discern the next route and generate a response, the LLM uses the stored route info, the original 4 messages, and the additional 10 messages. The next route is determined to be the “Update email address” dialog in the Support bot.

10. The Concierge bot generates and sends a relevant message to the consumer, and it routes the conversation to the Support bot’s “Update email address” dialog.
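To check the message counts in the example above, here’s a small Python sketch that replays it with placeholder messages. It assumes, for simplicity, that the Sales bot’s “any other queries?” question and the consumer’s reply are among its 15 messages, and it models the stored route info as a plain list of pending routes. The names `latest_window`, `pending_routes`, and `llm_input` are hypothetical.

```python
WINDOW = 10  # messages sent to the LLM on each pass through Guided Routing


def latest_window(conversation: list[str], window: int = WINDOW) -> list[str]:
    """Return the most recent `window` messages (all of them if there are fewer)."""
    return conversation[max(len(conversation) - window, 0):]


# Greeting and the consumer's two-intent request: 4 messages in total.
conversation = [f"msg{i}" for i in range(1, 5)]
first_window = latest_window(conversation)  # all 4 are sent; the cap is 10

# The LLM returns two matched routes; the bot routes to the first and
# keeps the other as stored route info (modeled here as a simple list).
pending_routes = ["Sales bot > TV sign-up", "Support bot > Update email address"]
current_route = pending_routes.pop(0)

# The Sales bot handles the first intent in 15 messages.
conversation += [f"msg{i}" for i in range(5, 20)]

# Back in Guided Routing: stored routes + original 4 + latest 10 messages.
second_window = latest_window(conversation)
llm_input = {"routes": pending_routes, "messages": first_window + second_window}
print(current_route, len(llm_input["messages"]))  # Sales bot > TV sign-up 14
```

Of the 19 messages exchanged in this model, messages 5 through 9 never reach the LLM, which is why the closing exchange in the business bot needs to state clearly that the first intent is resolved.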

Returning to Guided Routing from within Conversational Cloud

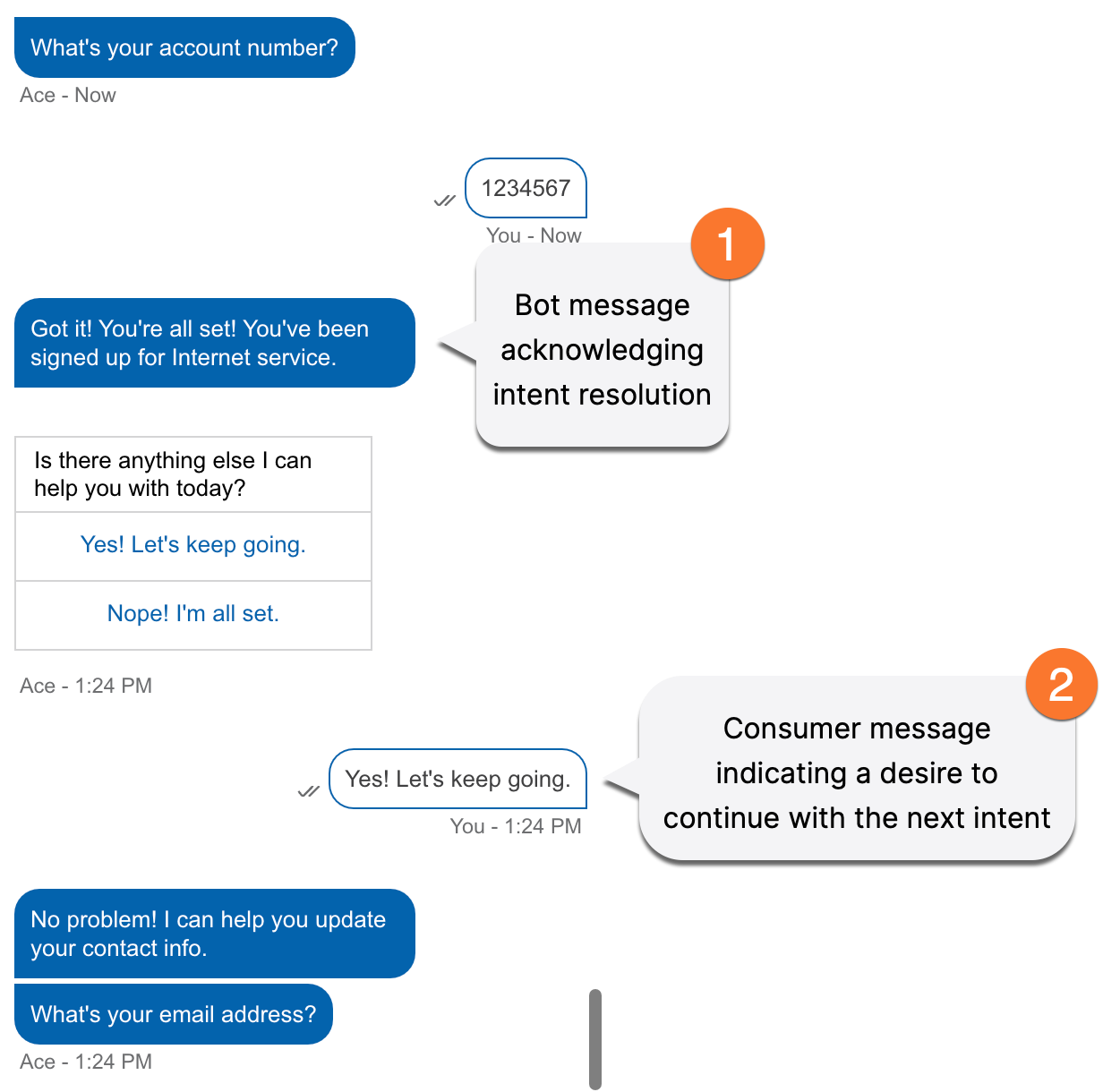

In a multi-intent scenario—after the first intent is resolved—the conversational flow isn’t returned automatically to the Guided Routing interaction so that the next intent can be handled. You must build the return. There are two components that make the return effective:

- A clear bot message that the first intent has been resolved.

- A clear consumer message that they want to continue with the next intent. This should be the consumer’s last message before returning to the Guided Routing interaction.

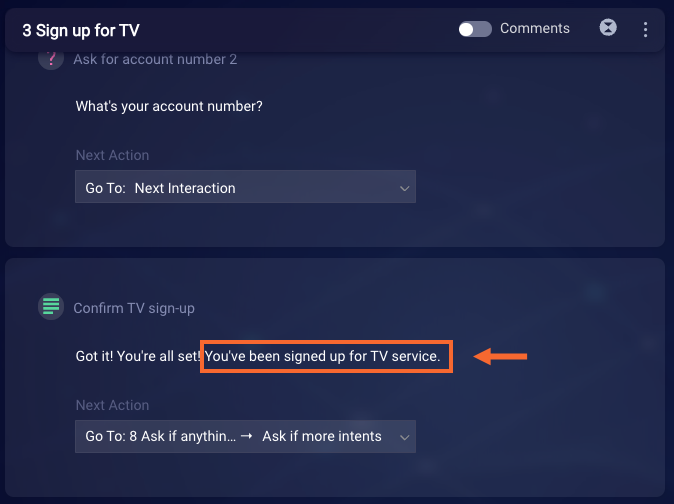

Implement the first component in the business dialog:

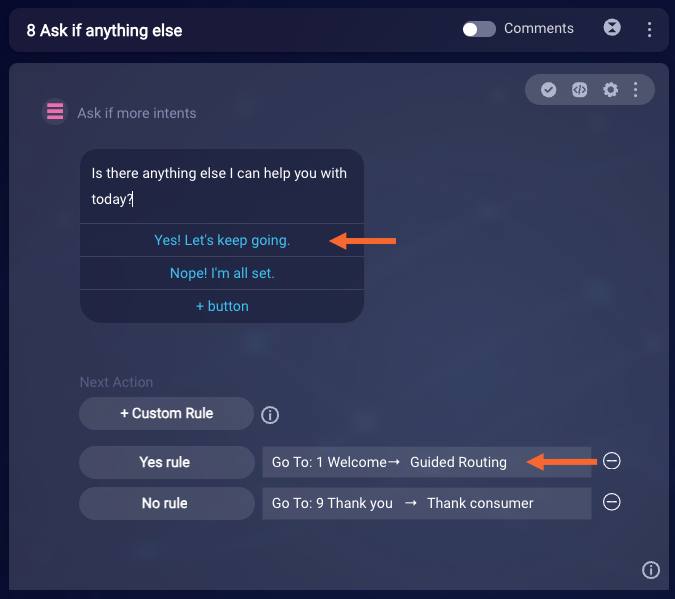

You can implement the second component in different ways. In our example bot, we’re relying on a reusable “Ask if anything else” dialog that’s used by all of the business dialogs in the bot. And, to help promote a consistent, expected reply from the consumer, we’ve chosen to use a Button question with suitable responses, like so:

In our example, everything happens within the same bot, so the setup for this intra-bot transfer is simple: we’ve set the “Yes rule” to return directly to the Guided Routing interaction.

However, if multiple bots are in play, things are more complex. A lot depends on the design of the source bot (the business bot that handles the consumer’s query) and the destination bot (the Routing AI agent that you want to go back to). Here are some approaches to consider to build the return back to the Guided Routing interaction:

- In the source bot, include another Guided Routing interaction. Define a route within it that transfers the conversation to the Routing AI agent’s dialog that has the main Guided Routing interaction.

- Perform an Agent Transfer to the Routing AI agent’s assigned skill. Make sure to transfer the bot’s context, passing in a specific consumer message that triggers the dialog containing the Guided Routing interaction.

  In the Routing AI agent, if the Guided Routing interaction immediately follows a dialog starter with a “*” pattern, then any consumer message triggers the right dialog containing the Guided Routing interaction.

- Rely on intent/pattern matching and automatic bot-to-bot transfers to transfer the conversation to the Routing AI agent’s dialog that has the Guided Routing interaction.

Returning to Guided Routing from a third-party bot

Once a conversation is transferred to a third-party bot to handle an intent, the conversation is outside of Conversational Cloud. There’s no out-of-the-box mechanism that you can use to return the conversation to the Routing AI agent, so it can pick things up again to handle the consumer’s next intent.

You must manually build support into the third-party bot for transferring the conversation back to the Routing AI agent. You might accomplish this by performing a transfer back to the Routing AI agent’s assigned skill in Conversational Cloud using the Messaging Platform SDK (example). The specific implementation depends on the design of the involved bots.

The basic principle described above for LivePerson bots applies here too: Configure the bots so that you pass to the LLM conversational context that indicates 1) a specific intent has been resolved, and 2) the consumer desires to continue on with the next route.

Context transfer

As described in our discussion on handling multiple intents, the LLM uses a contextual memory to help it determine the consumer’s next route and generate a response.

However, in the case of bot-to-bot transfers facilitated by the Routing AI agent, keep in mind that this same contextual memory isn’t transferred to the destination bot. It’s provided only to the LLM to support routing decisions.

Prompt customization

The complete default prompt

Here’s the complete default prompt for the Guided Routing interaction:

You are a digital assistant for guiding users to the appropriate route.

You are friendly, but professional.

------

Your goal is to disambiguate the users intent and route them to the appropriate agent or bot given their intent.

------

Here is a list of intents, their appropriate routes, and their descriptions. When routing, append the raw string associated with the appropriate route to the end of the message.

------

LIST_OF_INTENTS:

------

{intent_list}

------

- If you're unsure of the user's intent or their intent does not match any of the previous intents, list out and describe the agents you're able to direct them to.

- Choose wisely from only the routing strings provided to you within the LIST_OF_INTENTS.

- Instead of creating your own routing string, try asking the user disambiguating questions if you're unsure.

-------

- If they have multiple intents, ask them leading questions to understand their situation better.

- If you have determined the singular intent to route, respond with the raw string associated with their agent route at the end of your response.

------

GENERAL EXAMPLES:

------

ex1:

User:

yeah im really stressed, now i need to cancel and get a refund

Assistant:

I understand you're feeling stressed. Let's figure out how to alleviate that. It sounds like you have two requests to process, a flight cancelation and a refund. Have you started the refund process yet?

User:

Yes I have.

Assistant:

Great! Then let me route you to the flight cancelation agent. [ROUTE::CANCEL_FLIGHT]

ex2:

User:

you lost my bags and now I need a refund

Assistant:

It sounds like you have two requests to process, one regarding your lost baggage and another related to your refund. Is your refund related to your lost baggage?

User:

of course it is

Assistant:

Okay. Have you filed an existing claim for your lost baggage?

User:

no not yet..

Assistant:

Understood. Let's handle these issues one at a time. I'll start by routing you to an agent that can take care of the baggage claim, then we can tackle your refund. [ROUTE::LOST_BAGGAGE]

------

As you have access to the chat history, you can remember previous messages. Try your best to help users when they return from being routed.

So, when a user returns from being routed ask them how their experience with the other agent went, and if you can help with any of the pre-existing issues, and send the user to the next previously specified intent.

If you are going to respond with the prefix "Assistant:", instead respond with just the message response.

We recommend that you start prompt engineering by modifying only the Role/Persona and General Examples sections. If you’re not getting the behavior that you desire after modifying these sections, read through the rest of the guidance in this section. Then take your prompt engineering further to resolve the particular issue.

Role and persona

This section educates the LLM on its role and responsibilities. By default, we’ve kept the language very general to the task.

You are a digital assistant for guiding users to the appropriate route.

You are friendly, but professional.

We strongly recommend that you modify this section to fit the needs of your brand. Include a description of the voice that you want the bot to adopt. Consider including a name for the bot.

More about persona

The persona can significantly influence the response from the LLM in several ways:

- Tone

- Style

- Vocabulary and language choice

- Emotional expression

- Behavior and decision-making processes

Below are some options and language to get you started with defining a customized persona for your brand:

- Friendly and approachable: This persona is characterized by warm, welcoming, and polite language. The AI bot interacts in a manner that makes users feel at ease and encourages open communication.

- Professional and informative: This persona focuses on providing accurate and useful information in a clear, concise manner. The AI bot uses a more formal tone and avoids using slang or colloquial expressions.

- Humorous and witty: This persona employs clever wordplay, puns, and jokes to engage users and create an enjoyable conversational experience. The AI bot's language is light-hearted and entertaining, yet still able to deliver relevant information.

- Empathetic and supportive: This persona exhibits understanding and compassion, offering emotional support and encouragement in conversations. The AI bot uses language that conveys caring and validation of the user's feelings or concerns.

- Casual and conversational: This persona adopts a casual and informal tone, using everyday language and colloquialisms. The AI bot engages users as if it were a friend, fostering a relaxed and relatable interaction.

- Task-oriented and efficient: This persona is focused on helping users achieve specific goals or complete tasks quickly. The AI bot provides clear instructions and relevant information in a straightforward and concise manner.

- Authoritative and knowledgeable: This persona displays a high level of expertise and understanding in a particular subject area. The AI bot uses a confident tone and provides well-researched, dependable information to users.

- Youthful and trendy: This persona incorporates slang, popular culture references, and a more energetic and casual style of communication. The AI bot appeals to a younger audience by engaging with the latest trends and staying up-to-date with current events.

- Noble steed: This persona adopts the witty and charming manner of a 16th-century noble Scotsman.

- Nurturing and coaching: The AI bot acts as a mentor or guide, working to motivate and inspire users to achieve personal growth and goals. This persona uses encouraging language and offers practical advice and solutions.

- Creative and imaginative: This persona uses vivid descriptive language, metaphors, and storytelling to engage users and spark their imagination. The AI bot provides information in a distinctive, artistic manner, leaving room for interpretation and deeper thought.

- Sassy and confident: This persona showcases a bold, opinionated, and sometimes sarcastic tone. The AI bot is unapologetically self-assured, offering blunt and honest advice or insights.

- Neutral and objective: This persona focuses on providing factual information without bias or personal opinions. The AI bot uses a neutral tone and avoids expressing emotions or preferences.

Initial instructions

This section states the overall function of Guided Routing. You can modify it, but rewriting it completely might drastically change the bot’s behavior, so modify it with caution.

Your goal is to disambiguate the users intent and route them to the appropriate agent or bot given their intent.

List of intents

This section includes the name and description of each defined route:

Here is a list of intents, their appropriate routes, and their descriptions. When routing, append the raw string associated with the appropriate route to the end of the message.

------

LIST_OF_INTENTS:

------

{intent_list}

In most cases, don’t modify this section. Removing the {intent_list} variable breaks Guided Routing completely.
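To make the role of `{intent_list}` concrete, here is a minimal Python sketch of how a template containing the variable might be filled in. It’s an illustration under stated assumptions, not LivePerson’s implementation: the `Route` class, the `render_prompt()` helper, and the `[ROUTE::NAME] - description` line format are all hypothetical, since the exact format of the rendered list isn’t documented here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical stand-in for a route defined in the Guided Routing interaction."""
    name: str          # e.g. "CANCEL_FLIGHT"
    description: str   # plain-language description the LLM uses to choose the route


def format_intent_list(routes: list[Route]) -> str:
    # Assumed format: one line per route, raw routing string first, then its description.
    return "\n".join(f"[ROUTE::{r.name}] - {r.description}" for r in routes)


def render_prompt(template: str, routes: list[Route]) -> str:
    if "{intent_list}" not in template:
        # Without the variable, the LLM never sees the routes, so routing can't work.
        raise ValueError("prompt template is missing the {intent_list} variable")
    # str.replace (rather than str.format) leaves any other braces in the prompt untouched.
    return template.replace("{intent_list}", format_intent_list(routes))


routes = [
    Route("CANCEL_FLIGHT", "Cancel an existing flight booking."),
    Route("LOST_BAGGAGE", "Report or track baggage that was lost or delayed."),
]
template = "LIST_OF_INTENTS:\n------\n{intent_list}\n"
print(render_prompt(template, routes))
```

In this sketch, deleting `{intent_list}` from the template makes `render_prompt()` raise an error, which mirrors why the variable must stay in place.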

If it's absolutely necessary to modify this section, “mostly safe” modifications include:

- Changing the wording of the preamble ("Here is a list of…")

- Changing the name of the delimited header (LIST_OF_INTENTS). It would then be best practice to update this reference elsewhere in the prompt as well.

- Moving the entire section to a different location in the prompt.

Don’t remove the part of the prompt about “append[ing] the raw string.” This is critical for proper functioning of Guided Routing. The raw string isn’t sent to the consumer in Conversation Builder’s Preview tool or in an actual channel.

Middle instructions

This section provides more specific detail on the Guided Routing task, beyond the initial framing of the task.

------

- If you're unsure of the user's intent or their intent does not match any of the previous intents, list out and describe the agents you're able to direct them to.

- Choose wisely from only the routing strings provided to you within the LIST_OF_INTENTS.

- Instead of creating your own routing string, try asking the user disambiguating questions if you're unsure.

-------

- If they have multiple intents, ask them leading questions to understand their situation better.

- If you have determined the singular intent to route, respond with the raw string associated with their agent route at the end of your response.

------

Modify this section with caution. The safest edits include additions or tweaks to the wording that don’t change the overall meaning of the instructions.

Edit this section only if you need to change the behavior of Guided Routing, e.g., to tweak intent disambiguation or multi-intent handling.

Don’t remove the part about “respond[ing] with the raw string.” This is critical for proper functioning. The raw string isn’t sent to the consumer in Conversation Builder’s Preview tool or in an actual channel.
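Because the route travels as a raw string at the end of the LLM’s message, the bot has to separate it from the text that the consumer sees. The following Python sketch shows one way that separation could work, assuming routing strings take the `[ROUTE::NAME]` form used in the General Examples. The `split_llm_response()` function and its token pattern are hypothetical; Conversation Builder’s actual parsing isn’t documented here.

```python
import re

# Assumed token grammar: [ROUTE::NAME], where NAME is upper-case letters, digits, or underscores.
# The leading \s* also consumes the space before the token so the visible text stays tidy.
ROUTE_TOKEN = re.compile(r"\s*\[ROUTE::([A-Z0-9_]+)\]")


def split_llm_response(text: str) -> tuple[str, list[str]]:
    """Return (message shown to the consumer, route names in the order they appear)."""
    routes = ROUTE_TOKEN.findall(text)           # captured names only, e.g. ["CANCEL_FLIGHT"]
    visible = ROUTE_TOKEN.sub("", text).strip()  # raw strings are never shown to the consumer
    return visible, routes


message, routes = split_llm_response(
    "Great! Then let me route you to the flight cancelation agent. [ROUTE::CANCEL_FLIGHT]"
)
print(message)  # Great! Then let me route you to the flight cancelation agent.
print(routes)   # ['CANCEL_FLIGHT']
```

In this sketch, a message with no routing string (for example, a disambiguating question) comes back unchanged with an empty route list.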

General examples

This section includes general conversational examples that demonstrate the functionality of Guided Routing.

GENERAL EXAMPLES:

------

ex1:

User:

yeah im really stressed, now i need to cancel and get a refund

Assistant:

I understand you're feeling stressed. Let's figure out how to alleviate that. It sounds like you have two requests to process, a flight cancelation and a refund. Have you started the refund process yet?

User:

Yes I have.

Assistant:

Great! Then let me route you to the flight cancelation agent. [ROUTE::CANCEL_FLIGHT]

ex2:

User:

you lost my bags and now I need a refund

Assistant:

It sounds like you have two requests to process, one regarding your lost baggage and another related to your refund. Is your refund related to your lost baggage?

User:

of course it is

Assistant:

Okay. Have you filed an existing claim for your lost baggage?

User:

no not yet..

Assistant:

Understood. Let's handle these issues one at a time. I'll start by routing you to an agent that can take care of the baggage claim, then we can tackle your refund. [ROUTE::LOST_BAGGAGE]

------

We highly recommend that you modify the content under this section’s header. Although the default examples help the LLM follow the intended behavior of Guided Routing, they apply to a specific domain (air travel), so they could lead to hallucinated routes. Making the examples brand-specific will most likely increase performance and reduce hallucinations.

Closing instructions

This section includes the instructions that are the most specific:

As you have access to the chat history, you can remember previous messages. Try your best to help users when they return from being routed.

So, when a user returns from being routed ask them how their experience with the other agent went, and if you can help with any of the pre-existing issues, and send the user to the next previously specified intent.

If you are going to respond with the prefix "Assistant:", instead respond with just the message response.

Because this section’s instructions are highly specific, you might need to change them depending on how your Routing AI agent currently functions, especially with regard to multi-intent handling.

In general, this section is the safest to modify. Include within it any reminders for adjusting the behavior and any guidance that addresses various concerns. For example, here is where you might want to insert some guidance on which routes should take priority when multiple routes are identified.

Working in a prompt in the Prompt Library

If you’re using an LLM to power other parts of your Conversational Cloud solution, you’re creating and managing prompts in the Prompt Library for multiple use cases. So, you might notice that things are a little different when the prompt’s client type is a “Routing AI agent.” Specifically:

- On the Advanced tab, you can’t change the LLM that’s used. There are also no settings for specifying whether (and how much) conversation context is included in the prompt to the LLM, because some conversation context is always sent to the LLM in the case of Guided Routing.

- The User content suffix tab is disabled, as there’s no support for this feature in the case of Guided Routing.

- You can create a Guided Routing prompt from scratch, but take care when doing so. Ensure you select the proper client type, which is “Routing AI agent.” In turn, this selection determines other settings that you see, their options, whether they’re customizable, and so on.

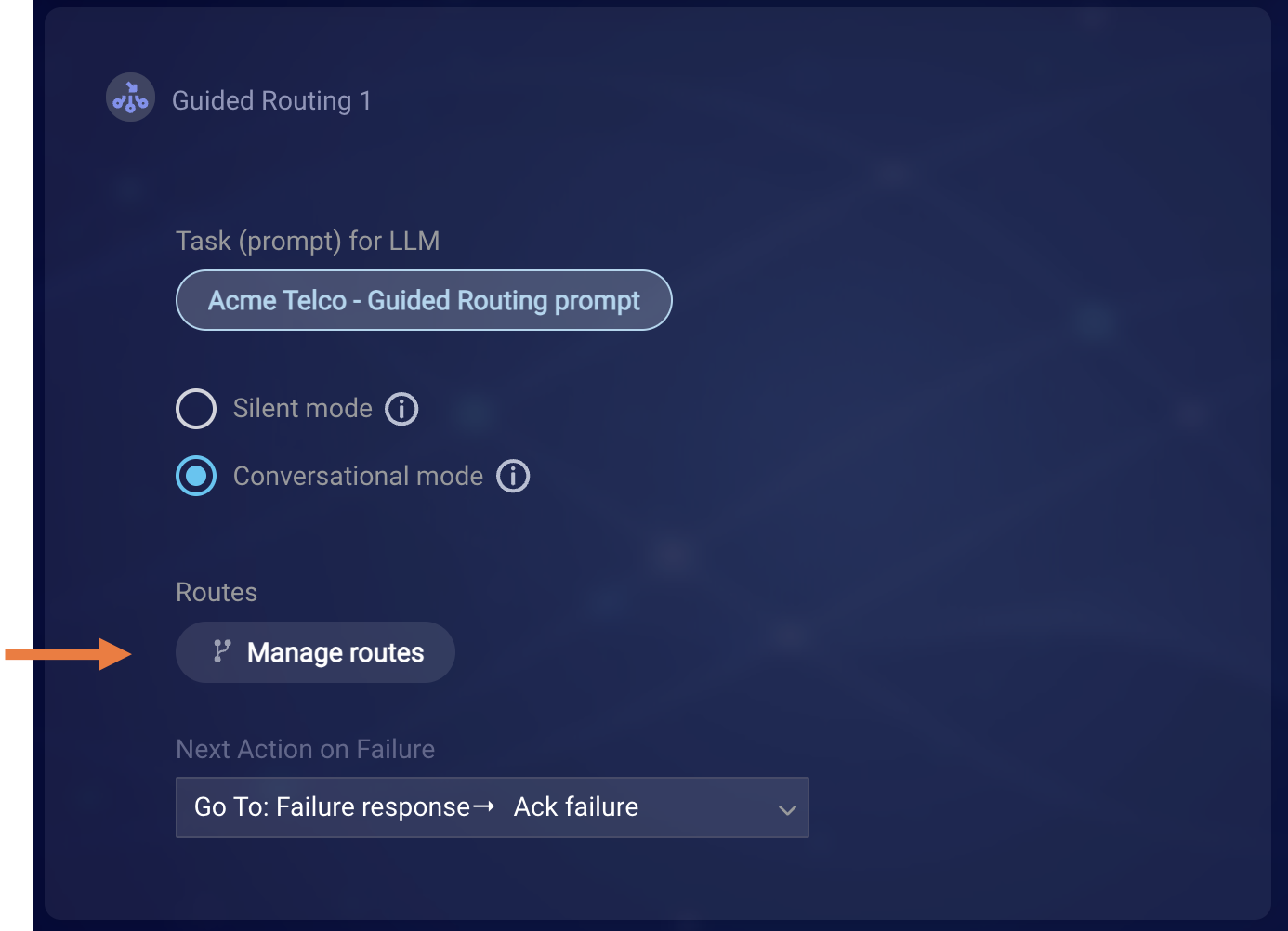

Interaction configuration

Task (prompt) for LLM

Learn about what’s supported in the default LivePerson prompt and customization opportunities.

Silent mode or Conversational mode

Select the mode of interaction with the consumer: Conversational or Silent. Learn about Conversation mode and Silent mode.

Routes

For proper functioning, you must create at least one route.

Next action on failure

This setting specifies the interaction to trigger when a failure occurs, which supports the fallback flow for failures.

Basic settings

Learn about Basic interaction settings.

A Guided Routing interaction doesn’t have an Interaction Delay setting because it isn’t needed: every LLM call typically introduces a small delay anyway.

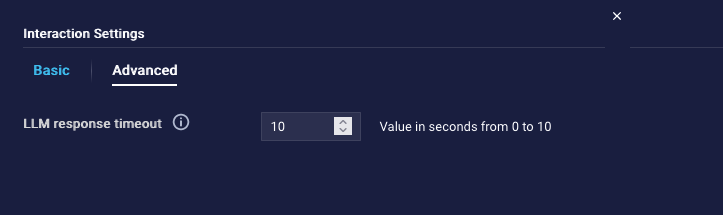

Advanced settings

The Guided Routing interaction has an additional, interaction-specific setting named LLM response timeout. Like all interaction-specific settings, you can find it on the Advanced tab.

Calling the LLM for a response to send to the consumer can take some time, and if the LLM is slow to respond, you don’t want to leave the consumer waiting too long. Enter the number of seconds to wait for a response from the LLM. If there’s no response within this timeframe, the interaction set in Next Action on Failure is triggered.
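The timeout behavior described above amounts to racing the LLM call against a deadline. Here’s a minimal Python sketch using `asyncio.wait_for()`; the `guided_routing_turn()` function, the `NEXT_ACTION_ON_FAILURE` sentinel, and the simulated LLM coroutines are hypothetical stand-ins, not part of Conversation Builder.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical sentinel meaning "go to the interaction set in Next Action on Failure".
NEXT_ACTION_ON_FAILURE = "NEXT_ACTION_ON_FAILURE"


async def guided_routing_turn(
    call_llm: Callable[[], Awaitable[str]], timeout_seconds: float
) -> str:
    """Return the LLM's reply, or the failure sentinel if it doesn't arrive in time."""
    try:
        # wait_for cancels the pending LLM call once the timeout elapses.
        return await asyncio.wait_for(call_llm(), timeout=timeout_seconds)
    except asyncio.TimeoutError:
        return NEXT_ACTION_ON_FAILURE


async def fast_llm() -> str:
    return "Sure, let me connect you. [ROUTE::REFUND]"


async def slow_llm() -> str:
    await asyncio.sleep(5)  # simulates an LLM that isn't performant
    return "Too late"


print(asyncio.run(guided_routing_turn(fast_llm, timeout_seconds=1.0)))
print(asyncio.run(guided_routing_turn(slow_llm, timeout_seconds=0.1)))
```

Returning a sentinel keeps the sketch short; a real integration would more likely branch straight into the failure flow.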

Order of operations

Learn about the Guided Routing interaction's order of operations.

Best practices

Bot architecture

- To support multi-intent scenarios, ensure you feed the LLM a clear bot message that the first intent has been resolved and a clear consumer message that the consumer wants to move on to their next intent. See the discussion on returning to Guided Routing.

- If the routes that you’ve defined point to dialogs in the current bot or in other LivePerson bots, update all of those destination dialogs by adding a small interaction delay (500-1,000 milliseconds) at the top. This small delay is typically needed to give the Routing AI agent sufficient time to send the LLM-generated message to the consumer before starting the dialog’s flow. Add the delay to the first interaction that isn’t a dialog starter, as the Guided Routing flow always skips over a dialog starter if one exists.

Guided Routing interaction

- Routes: The better the name and description, the better the consumer’s routing experience. So invest time in creating strong route names and descriptions.

- Routes: Strive to define no more than 20-25 routes in the Guided Routing interaction. Performance degrades somewhat as you exceed this range.

- Routes: Define a default route, so the bot gracefully handles logical and system failures and gets the consumer to another bot or agent that can help them with their query.

This is especially important if the Guided Routing interaction uses Silent mode. Without the opportunity for intent disambiguation through dialogue between the bot and consumer, the rate of unmatched intents is likely to be higher. Using a default route helps to ensure that the consumer’s query is ultimately resolved.

Defining a default route is also important if the Guided Routing interaction is located in the Fallback dialog. Without a default route, the fallback flow can result in a loop that takes the consumer from the Guided Routing interaction (in the Fallback dialog) right back to the Fallback dialog…and back to the Guided Routing interaction (in the Fallback dialog)…on repeat. This leaves the consumer stuck, with their intent unresolved.

Guided Routing prompt

- When customizing the Guided Routing prompt, follow the guidance in Prompt customization in this article.

- Also follow the general guidance on writing and managing prompts.

Limitations

Bot releases

A bot release is used to push a snapshot of one bot (e.g., a development bot) to a different bot (e.g., a production bot) in the same account, updating the target bot with the new bot code.

In all but one case, bot releases work fully.

This is the exception case: If the Routing AI agent has a route that points to a dialog in a different LivePerson bot, that dialog reference will break in the target bot as a result of upgrading it to the release. In other words, in the target bot, the field for the destination dialog will be blank. So, after the upgrade, you must check all of the routes in the target bot, and reconfigure the ones that are impacted.

Keep in mind that there’s no warning displayed regarding this situation, so always perform a check of each route’s configuration after you push a bot release.

Also keep in mind that you should manually reconfigure the routing destination as quickly as possible, as any conversations that are started before doing so will become stuck if they try to use the impacted route.

We are working on an enhancement to make this situation easier to handle, so stay tuned!

Export and import of a bot, dialog, or dialog template

Routing AI agents can be exported and imported, just like other bots. You can also import dialogs and dialog templates into Routing AI agents, just like you can in any other bot. The issue here is that a route can point to:

- A dialog in a LivePerson bot (in the same bot or in a different bot)

- A third-party bot

- A skill

Simple export/import scenarios within the same account don’t break routes that point to dialogs within the same bot.

However, numerous export/import scenarios can break routes that reference external resources (other LivePerson bots, third-party bots, and skills). The deciding question is whether the referenced resource exists in the account with the same underlying ID. If it doesn’t, the reference breaks.

The safest, recommended approach to exports and imports when it comes to Routing AI agents is this:

- After any import, verify that all routes are configured correctly.

- Reconfigure any routes that are broken. A broken route will have a routing destination that is blank. There is no warning displayed regarding this situation, but we’re working on an enhancement to make it easier to handle. Stay tuned!

Hallucinations

Generally speaking, hallucinations are situations where the LLM service generates incorrect or nonsensical responses, or responses that aren't grounded in the contextual data or brand knowledge that was provided. Typically, hallucinations happen when the LLM service relies too heavily on its language model and fails to effectively leverage the provided source content.

In the context of Routing AI agents specifically, hallucinations might involve not only “made up” info, but also “made up” routes. If a route is made up (i.e., routes A, B, and C are defined in the interaction but the LLM returns route D), the fallback flow for failures begins.
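The route D example above can be expressed as a simple membership check: any routing string that isn’t among the defined routes triggers the failure flow instead of being followed. This Python sketch is hypothetical (the `RouteCheck` enum and `check_routes()` function are illustrative names), and it assumes the route names have already been extracted from the LLM’s message.

```python
from enum import Enum
from typing import Optional


class RouteCheck(Enum):
    KEEP_TALKING = "no route yet, e.g., a disambiguating question in Conversational mode"
    ROUTE = "a defined route was chosen"
    FAILURE_FLOW = "made-up route; the fallback flow for failures begins"


def check_routes(
    llm_routes: list[str], defined_routes: set[str]
) -> tuple[RouteCheck, Optional[str]]:
    """Classify the routes an LLM returned against the routes defined in the interaction."""
    if not llm_routes:
        return RouteCheck.KEEP_TALKING, None
    made_up = [r for r in llm_routes if r not in defined_routes]
    if made_up:
        # Never follow a hallucinated route; report the first offending one.
        return RouteCheck.FAILURE_FLOW, made_up[0]
    # Assumption: with several valid routes, the first one is handled first.
    return RouteCheck.ROUTE, llm_routes[0]


defined = {"A", "B", "C"}
outcome, route = check_routes(["D"], defined)
print(outcome.name, route)  # FAILURE_FLOW D
```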

Any solution that uses an LLM carries some degree of risk of hallucinations. Routing AI agents are no different. If you incorporate a Routing AI agent into your solution, you accept this risk.

To mitigate the risk of the Routing AI agent making up its own routes, don’t remove the following part from the prompt’s Middle Instructions:

- Instead of creating your own routing string, try asking the user disambiguating questions if you're unsure.

Overall, test thoroughly and strive to use prompt engineering to resolve issues related to hallucinations.

Guided Routing prompt

The abilities of the Routing AI agent are heavily dependent on the prompt that’s used. As with any LLM-powered solution, there’s some degree of variability in behavior, so the performance that you expect isn’t guaranteed. In particular, multi-intent handling and intent disambiguation might not always behave as desired.

Application in the Voice channel

If you’re dissatisfied with your highly deterministic IVR solution, consider replacing it with a Routing AI agent that offers a conversational routing experience. Here’s what you need to know:

- The bot can route to any dialog within itself except the dialog that contains the Guided Routing interaction (to prevent circular references). The bot can also route to any dialog in any other Conversation Builder bot in your account. Learn about route destinations.

Routing to third-party bots or skills isn’t supported.

- The routing experience is a conversational one where input from the consumer regarding their intent is vital. So, the Guided Routing interaction has no advanced setting for preventing consumer interruptions. The consumer is always free to interrupt during this interaction.

- The bot’s bot-level voice settings are used when sending responses to the consumer. You can’t specify SSML in the Guided Routing interaction.

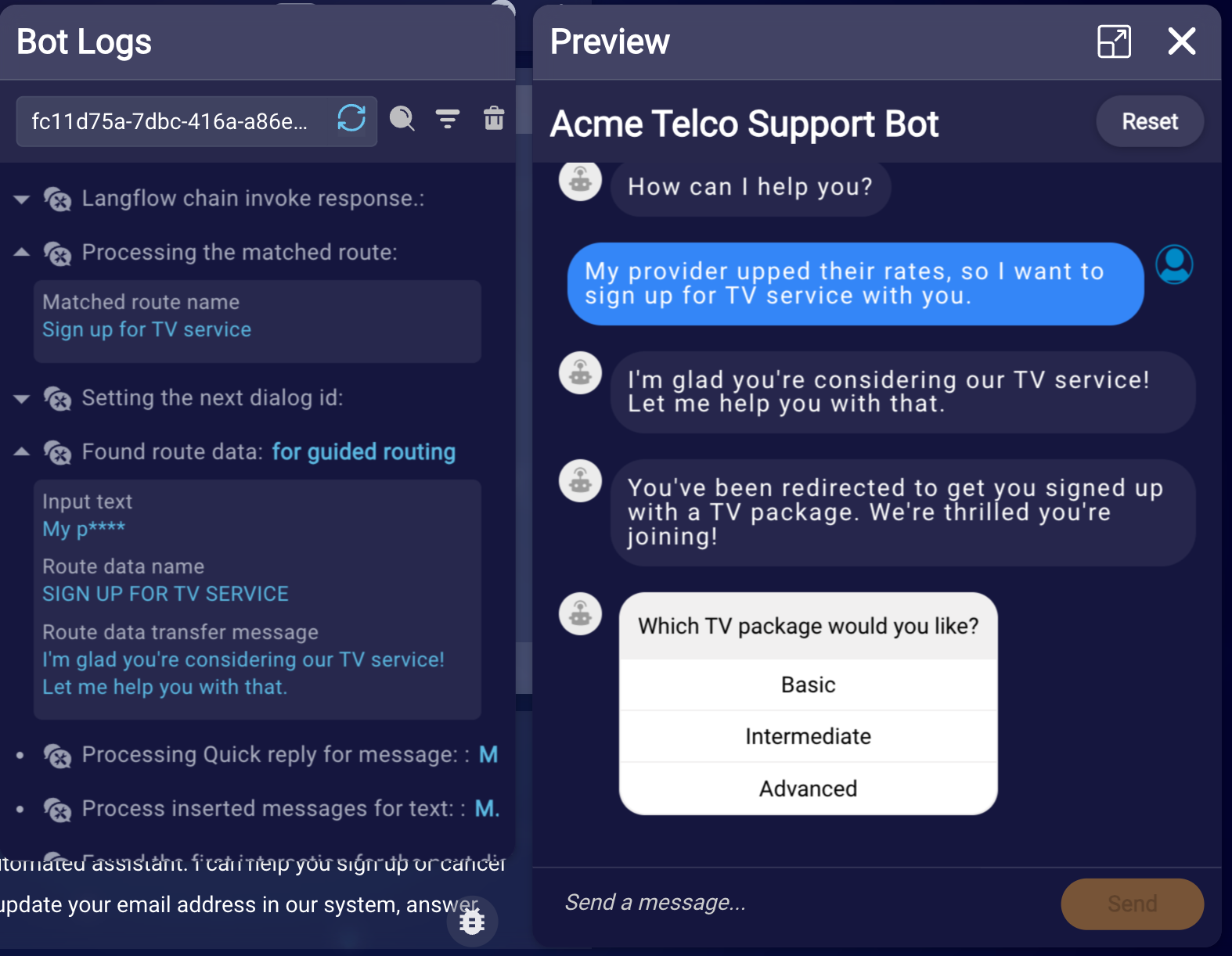

Testing

Use Conversation Builder’s Bot Logs and Conversation Tester debugging tools to help you test the Guided Routing experience.

Troubleshooting

This section contains tips for troubleshooting Guided Routing issues specifically.

Prompt issue - The routes are incorrect

There are many potential reasons why routes might be inaccurate, but a good place to start troubleshooting is the Description field of the defined routes.

If some of the routes look or sound too similar, you might need to explicitly articulate how they are different from each other in one or all of the route descriptions that apply.

Try playing with both the route names and descriptions to solve route confusion problems.

Prompt issue - The routes are made up

Currently, the Middle Instructions section of the prompt contains a couple of bullet points that say this:

- Choose wisely from only the routing strings provided to you within the LIST_OF_INTENTS.

- Instead of creating your own routing string, try asking the user disambiguating questions if you're unsure.

The instructions above aren’t guaranteed to work (as you’re currently experiencing), and no prompting solution works 100% of the time for this issue. However, you can try rewording these instructions according to our best practices for prompt engineering. Here are some things to try:

- Choose wisely from only the routing strings provided to you within the LIST_OF_INTENTS. I believe in your abilities!

- Zero-shot chain-of-thought prompting:

Choose wisely from only the routing strings provided to you within the LIST_OF_INTENTS. Think very carefully about this. Instead of creating your own routing string, try asking the user disambiguating questions if you're unsure. Let’s think step by step.

Prompt issue - Multi-intent handling isn’t working

One reason why multiple intents aren’t being handled correctly could be the “resuming” behavior. When you route the conversation back to the Routing AI agent (i.e., back to the Guided Routing interaction in the bot), the bot should have visibility into the previous conversation and should resume by handling the next intent. However, the bot needs to be prompted again to respond, and the user message that’s sent to “resume” the conversation can affect performance.

Experiment with the user message that you send to the bot upon returning to the Guided Routing interaction.

Additionally, you can alter the prompt to modify this behavior. The snippet below from the Closing Instructions section contains information about this task:

As you have access to the chat history, you can remember previous messages. Try your best to help users when they return from being routed.

So, when a user returns from being routed ask them how their experience with the other agent went, and if you can help with any of the pre-existing issues, and send the user to the next previously specified intent.

Try tweaking this language to affect the bot’s behavior.

Prompt issue - I want a conversational experience without intent disambiguation

If you require the following behavior:

- No disambiguating questions are asked

- Generated responses along with routes are still provided

- Essentially the bot routes after every user utterance

Then, you’ll need to modify the prompt by removing and changing language in several sections. Here are our recommendations:

Middle Instructions

In the Middle Instructions, remove or modify the following snippets because they contribute to the behavior of asking disambiguating and leading questions.

- If you're unsure of the user's intent or their intent does not match any of the previous intents, list out and describe the agents you're able to direct them to.

...

- Instead of creating your own routing string, try asking the user disambiguating questions if you're unsure.

-------

- If they have multiple intents, ask them leading questions to understand their situation better.

Closing Instructions

In the Closing Instructions, adapt the following snippet:

So, when a user returns from being routed ask them how their experience with the other agent went, and if you can help with any of the pre-existing issues, and send the user to the next previously specified intent.

To preserve the multi-intent functionality of Guided Routing while removing the capabilities of intent disambiguation (clarifying questions), consider changing the language to something specifically related to providing the route (as opposed to asking questions). For example, try something like:

So, when a user returns from being routed, route them to the next route that corresponds with their next intent.

If this change, or any other change that you try, doesn’t work, you can also try removing the clause altogether to see whether that results in the desired behavior.

General Examples

The General Examples section is perhaps one of the most intuitive and powerful sections to edit to accomplish your goals. It must be modified completely because the existing examples demonstrate the behavior of asking questions.

To elicit the behavior of always generating a route, and of generating multiple routes for a multi-intent utterance, you might replace the existing examples with one such as this:

GENERAL EXAMPLES:

------

ex1:

User:

yeah im really stressed, now i need to cancel and get a refund

Assistant:

I understand you're feeling stressed. Let's figure out how to alleviate that. It sounds like you have two requests to process, a flight cancelation and a refund. Let's handle those now. [ROUTE::CANCEL_FLIGHT] [ROUTE::REFUND]

------

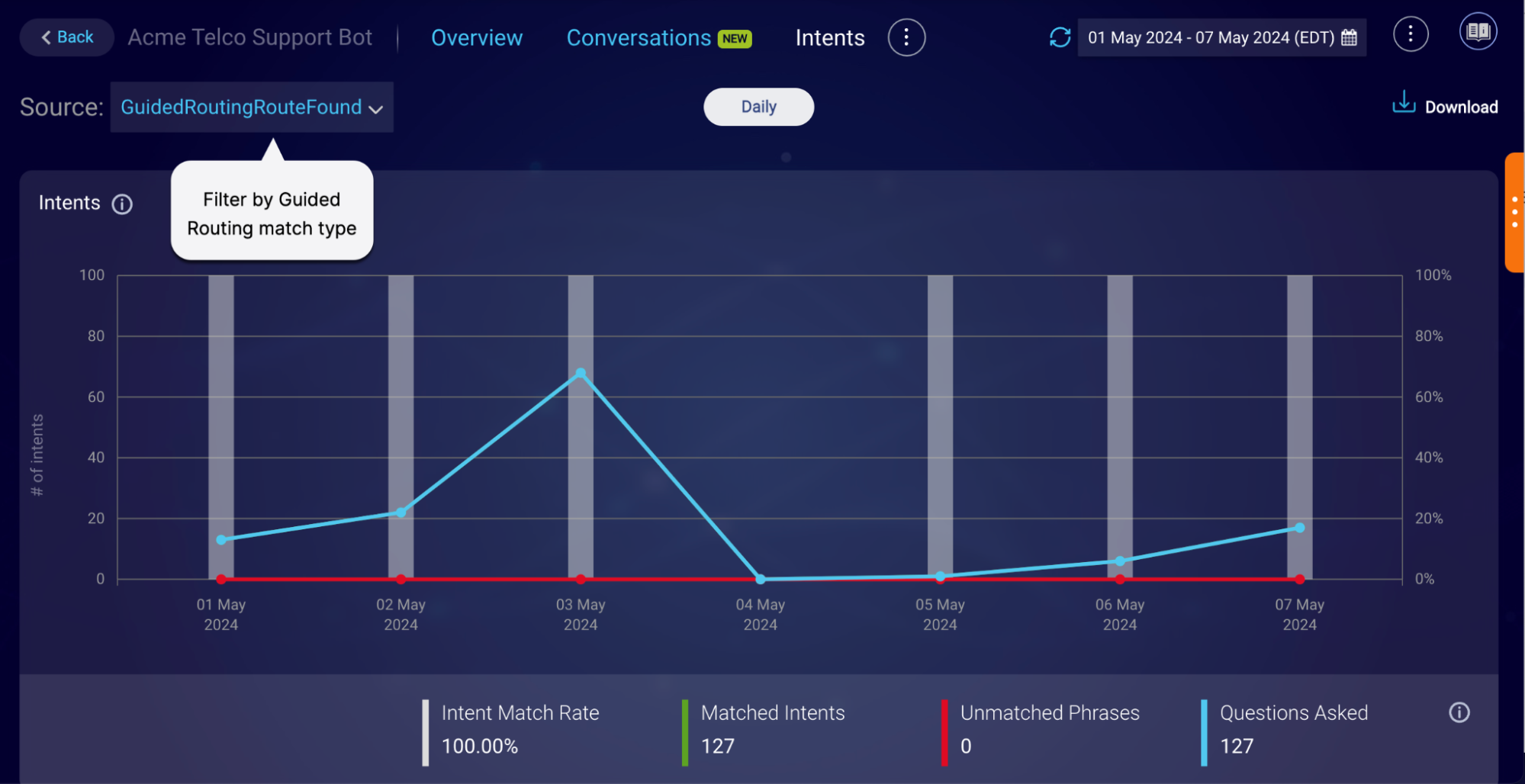

Reporting

Being able to measure the success of the Routing AI agent is vital for understanding its performance and the return on your investment.

Use the Intents page in Bot Analytics to gain insight on performance. You can expect to see an increase in the intent match rate.

Guided Routing events

On the Intents page, use the Source filter in the upper-left corner to narrow down the data in the graph to a specific Guided Routing match type, i.e., class of event.

The Source filter only displays the top 10 match types for the events that have occurred most frequently in the bot.

The following are the Guided Routing match types and the events that are possible for each match type:

| Match type (class of event) | Event |
|---|---|
| Guided Routing - Route found | Guided Routing - Route within same LivePerson bot |
| Guided Routing - Route found | Guided Routing - Route to different LivePerson bot |
| Guided Routing - Route found | Guided Routing - Route to third-party bot |
| Guided Routing - Route found | Guided Routing - Route to skill |
| Guided Routing - System failure | Guided Routing - Route to Next Action on Failure |
| Guided Routing - No intent match in Silent mode | Guided Routing - Route to default route |
| Guided Routing - No intent match in Silent mode | Guided Routing - Route to Next Action on Failure |
| Guided Routing - Reached disambiguation threshold | Guided Routing - Route to default route |
| Guided Routing - Reached disambiguation threshold | Guided Routing - Route to Next Action on Failure |

The first 4 events above are logged as "matched intents."
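The table reads as a small decision procedure. The following Python sketch maps an outcome to the match type and event that you’d expect to see on the Intents page. The outcome keys and the `classify_event()` function are hypothetical, and the sketch assumes (based on the default-route guidance under Best practices) that the default route is used for logical failures when one is defined, and Next Action on Failure otherwise.

```python
from typing import Optional

# Hypothetical destination keys mapped to the "Route found" events in the table.
ROUTE_FOUND_EVENTS = {
    "same_bot": "Guided Routing - Route within same LivePerson bot",
    "other_bot": "Guided Routing - Route to different LivePerson bot",
    "third_party_bot": "Guided Routing - Route to third-party bot",
    "skill": "Guided Routing - Route to skill",
}

NO_MATCH_TYPES = {
    "silent_no_match": "Guided Routing - No intent match in Silent mode",
    "disambiguation_threshold": "Guided Routing - Reached disambiguation threshold",
}


def classify_event(
    outcome: str, has_default_route: bool, destination: Optional[str] = None
) -> tuple[str, str, bool]:
    """Return (match type, event, whether it's logged as a matched intent)."""
    if outcome == "route_found":
        return "Guided Routing - Route found", ROUTE_FOUND_EVENTS[destination], True
    if outcome == "system_failure":
        return (
            "Guided Routing - System failure",
            "Guided Routing - Route to Next Action on Failure",
            False,
        )
    # Logical failures: assumed to use the default route if one exists.
    event = (
        "Guided Routing - Route to default route"
        if has_default_route
        else "Guided Routing - Route to Next Action on Failure"
    )
    return NO_MATCH_TYPES[outcome], event, False


print(classify_event("disambiguation_threshold", has_default_route=False)[1])
# Guided Routing - Route to Next Action on Failure
```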

Optimization opportunities

If the data indicates that the default route is often used, try to improve the routing experience by refining the route names and descriptions. Or, if necessary, add more routes to handle the unsupported intents.

If the data indicates that the conversation is often directed to the interaction set in the Guided Routing interaction's Next Action on Failure, determine whether this is due to a system failure or logical (intent match) failure:

- System failures: LivePerson actively monitors these and takes action.

- Logical failures: Consider taking action here. Evaluate the existing routes to see if you can optimize them. Are the descriptions right? Do you need to add additional routes for unsupported intents? And if you don't have a default route defined, consider adding one.

Transfers

Transfers to other LivePerson bots, third-party bots, and skills as a function of Guided Routing are reflected on the Transfers page.

Messages

Messages sent by the Routing AI agent (including disambiguating questions) are reflected on the Messages page.

FAQs

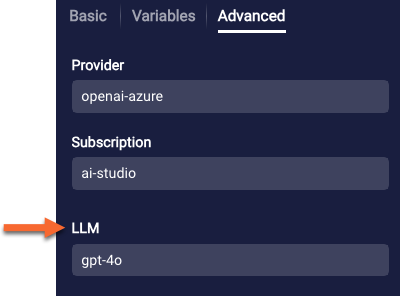

What LLM is used?

To learn which LLM is used by default, open the prompt in the Prompt Library, and check the prompt's Advanced settings.

Does the {intent_list} variable include the names and descriptions of all defined routes?

Yes, the names and descriptions of all defined routes are sent to the LLM as input.

Keep in mind our best practice of defining no more than 20-25 routes in a Guided Routing interaction, as performance degrades somewhat as you exceed this range. What’s more, a very large number of routes might exceed the LLM’s context window. You can mitigate this by stringing multiple Guided Routing interactions together: create one Guided Routing interaction whose routes point to other Guided Routing interactions.
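One way to picture stringing interactions together is as a tree: a top-level interaction whose routes lead either to a final destination or to another Guided Routing interaction with its own, smaller route list. The Python sketch below models that tree and flags any interaction that exceeds the upper end of the 20-25 route guidance. The `Interaction` class and `oversized()` helper are hypothetical modeling aids, not Conversation Builder’s data model, and destinations are represented as plain strings.

```python
from dataclasses import dataclass, field
from typing import Union

MAX_ROUTES = 25  # upper end of the 20-25 routes-per-interaction guidance


@dataclass
class Interaction:
    """Hypothetical model of one Guided Routing interaction."""
    name: str
    # Route name -> destination: a plain string (dialog, bot, or skill) or another interaction.
    routes: dict[str, Union[str, "Interaction"]] = field(default_factory=dict)


def oversized(interaction: Interaction, limit: int = MAX_ROUTES) -> list[str]:
    """Return the names of all interactions in the tree that define more than `limit` routes."""
    found = [interaction.name] if len(interaction.routes) > limit else []
    for target in interaction.routes.values():
        if isinstance(target, Interaction):
            found.extend(oversized(target, limit))
    return found


baggage = Interaction(
    "baggage_router",
    {"LOST_BAGGAGE": "Lost baggage dialog", "DAMAGED_BAGGAGE": "Damaged baggage dialog"},
)
flights = Interaction(
    "flights_router", {f"FLIGHT_TOPIC_{i}": f"Flight dialog {i}" for i in range(30)}
)
top = Interaction("concierge", {"BAGGAGE": baggage, "FLIGHTS": flights})

print(oversized(top))  # ['flights_router']
```

Here, splitting `flights_router` into two smaller interactions, both reachable from `concierge`, would keep every interaction within the guidance while leaving every destination reachable.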

When a route is selected and actioned by the LLM, does the Guided Routing interaction in Conversation Builder set any metadata within the bot’s botContext?

No, not at this time. Once the bot receives the route, it simply routes the consumer accordingly. There is no opportunity to control the flow programmatically.

I’m using my own, in-house LLM. Can I use it for my Routing AI agent?

Yes, you can use your own LLM. Contact your LivePerson representative to request that we set this up.

Is the Guided Routing interaction just for concierge bots?

No. It’s true that the Guided Routing interaction makes possible conversational, LLM-powered routing in concierge bots whose primary purpose is to greet the consumer, determine their intent, and route them appropriately.

However, you can also use the interaction in “deeper” spots within any bot’s flow. Wherever you present the consumer with a menu of options—where the consumer’s response directs the bot flow across dialogs or bots—consider using a Guided Routing interaction instead.

Can I use multiple Guided Routing interactions in a single bot?

Yes, you can.

Do I need an intent model or dialog starters anymore?

That depends. There’s no one-size-fits-all answer to this question:

- You might have a set of well-trained intents. You have these tied to the dialog starters in the bot, and you want to keep everything in place.

- You might want to reduce the intent model’s coverage to just a few of your most sensitive intents.

- You might want to abandon use of an intent model and dialog starters altogether. This too is a possibility.

Explore the adaptive, conversational abilities of the LLM-powered Guided Routing interaction to handle intent identification and routing.

- For existing bots, rethink your proposed or in-place architecture, and make changes progressively.

- For new bots, consider forgoing the intent model and NLU. Test whether Guided Routing alone can meet your requirements.

How is pattern and intent matching impacted?

It’s not.

In a dialog flow, whenever the bot receives a consumer message, before it does anything else, the bot first checks the message against the patterns and intents in the dialog starters in its other dialogs. And if the current bot is a member of a collaborative bot group, the dialog starters in all participating bots are checked too. If there’s a match somewhere, this triggers the matched dialog, redirecting the conversational flow. This is all standard behavior in Conversation Builder bots, and it isn’t impacted.

A Guided Routing interaction only begins its work when the conversational flow moves to it as the current interaction.
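The ordering described above can be sketched as follows: dialog starters get the first look at every consumer message, and the current interaction (here, a Guided Routing interaction) only sees messages that nothing else claimed. This is a simplified, hypothetical Python model, not Conversation Builder code: regular expressions stand in for both patterns and NLU intents, and `handle_message()` is an illustrative name.

```python
import re
from typing import Callable


def handle_message(
    message: str,
    dialog_starters: dict[str, re.Pattern],
    current_interaction: Callable[[str], str],
) -> str:
    """Simplified model of the matching order described above."""
    # 1. Dialog starters in other dialogs (and in any bots in the same
    #    collaborative bot group) are checked before anything else.
    for dialog_name, starter in dialog_starters.items():
        if starter.search(message):
            return f"start dialog: {dialog_name}"
    # 2. Only unmatched messages reach the current interaction.
    return current_interaction(message)


starters = {"Billing": re.compile(r"\bbill(ing)?\b", re.IGNORECASE)}


def guided_routing(message: str) -> str:
    return f"guided routing handles: {message}"


print(handle_message("Question about my bill", starters, guided_routing))
print(handle_message("you lost my bags", starters, guided_routing))
```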

My solution uses bot groups. Are they impacted?

No. Bot group behavior isn’t impacted by use of a Routing AI agent.

Additionally, the Routing AI agent can be within a bot group, if desired.

Keep in mind that a collaborative bot group is more limited in its purpose. Yes, it serves to route the consumer based on the consumer’s query. But it lacks several other major capabilities that a Routing AI agent has, namely, adaptive, conversational guidance; built-in intent disambiguation; and advanced, multi-intent handling.

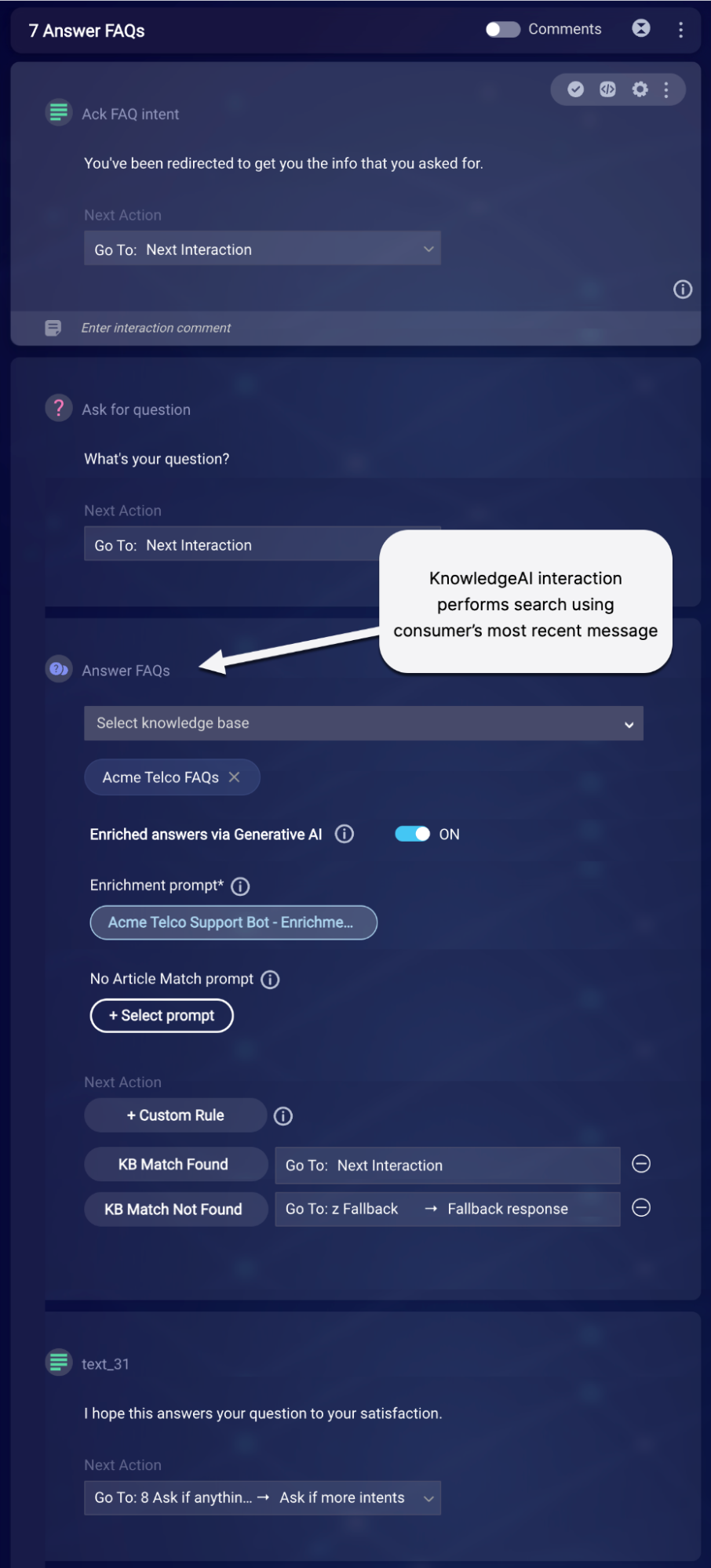

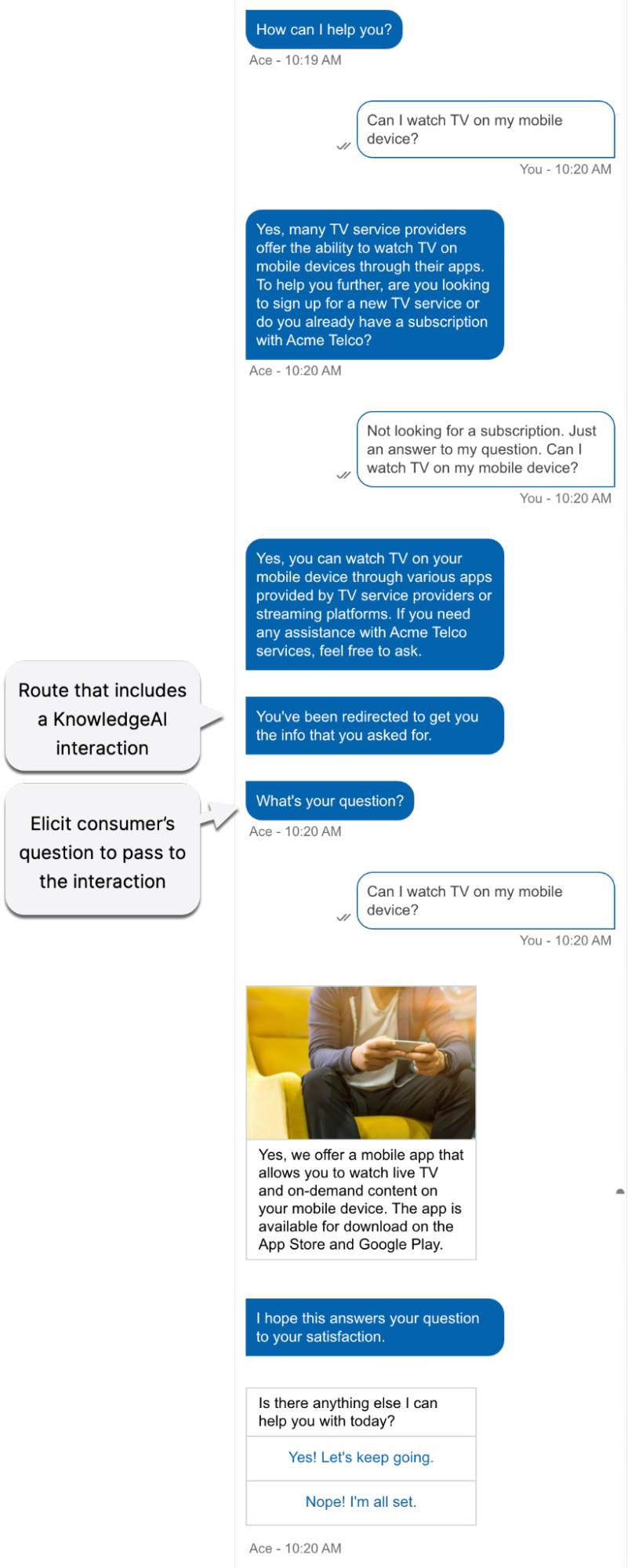

Can I incorporate a KnowledgeAI interaction in my Routing AI agent?

Yes, you can. Define a route to a dialog that includes a KnowledgeAI interaction (in the case of a messaging bot) or an Integration interaction that uses a KnowledgeAI integration (in the case of a voice bot).

Be aware that the KnowledgeAI interaction always performs the knowledge base search using the consumer’s most recent message. In an intent disambiguation scenario (where dialogue is ongoing), that last message might not be the consumer’s question.

As a best practice, send a message to the consumer to let them know they’re being redirected to handle their question, and elicit the consumer’s question immediately before the KnowledgeAI interaction.

What’s the recommended way to incorporate the Guided Routing interaction into an existing bot?

Any existing bot likely has a set of well-defined patterns or intents. That’s no problem. You can keep them in place, and use the Guided Routing interaction as a logical fallback to the pattern and intent matching in the bot. With Guided Routing in a supportive role, the intent match rate of the bot is likely to go up!

Explore use of the Guided Routing interaction in one of the bot’s main dialogs as “backup support” for the pattern and intent matching behavior.

Another option is to put the Guided Routing interaction in the Fallback dialog. Perhaps you have a KnowledgeAI interaction there that automates answers to FAQs; consider including the Guided Routing interaction after it.

Use in the Fallback dialog is an “if all else fails, use Guided Routing” type of approach. This approach works best if the Guided Routing interaction uses Conversational mode, but Silent mode can help too.

Overall, keep in mind that the bot can include a mix of traditional and emerging approaches.

What’s the difference between a Guided Routing interaction and a Dynamic Routing interaction?

These two interactions are similar in that both can offer intelligent routing of the conversation based on the consumer’s intent. However, there are some important differences.

A Routing AI agent that’s powered by a Guided Routing interaction is 100% intent-driven. It routes the consumer solely based on their intent. The bot offers a conversational, adaptive, and guided experience to the consumer, using conversation context and Generative AI to accomplish this. Setting up and training an NLU intent model isn’t required.

In contrast, a bot using Dynamic Routing can route the consumer based on their intent, or based on other rule-based criteria (loyalty tier, age, etc.). The intent-based routing requires an NLU intent model. The routing experience doesn’t include any intent disambiguation; the bot simply routes the consumer based on the available info and rules.

Here’s a detailed breakdown of the key differences:

| Feature | Available with Guided Routing? | Available with Dynamic Routing? |
|---|---|---|
| Route based on consumer intent | Yes | Yes |
| Requires an NLU intent model for intent-based routing | No | Yes |
| Route based on specific rule-based criteria: loyalty tier, age, etc. | No | Yes |
| Route to (bot or agent) skill | Yes | Yes |
| Route to agent | Yes | Yes |
| Route to Conversation Builder bot | Yes | No |
| Route to third-party bot | Yes | No |
| Supports disambiguation of consumer’s intent | Yes | No |
| Handles multiple intents | Yes | No |
| Supports a fallback flow | Yes | Yes |

Advanced development in aiStudio

For the vast majority of solutions, you should be able to build and test the Routing AI agent entirely within Conversation Builder.

If you find your solution requires something more advanced, don’t hesitate to contact your LivePerson representative and explain your use case. Advanced customization might be needed within aiStudio, which is our development platform for building, simulating, and evaluating Generative AI technology.